Data Extraction with Chris Mercer

39,00 $

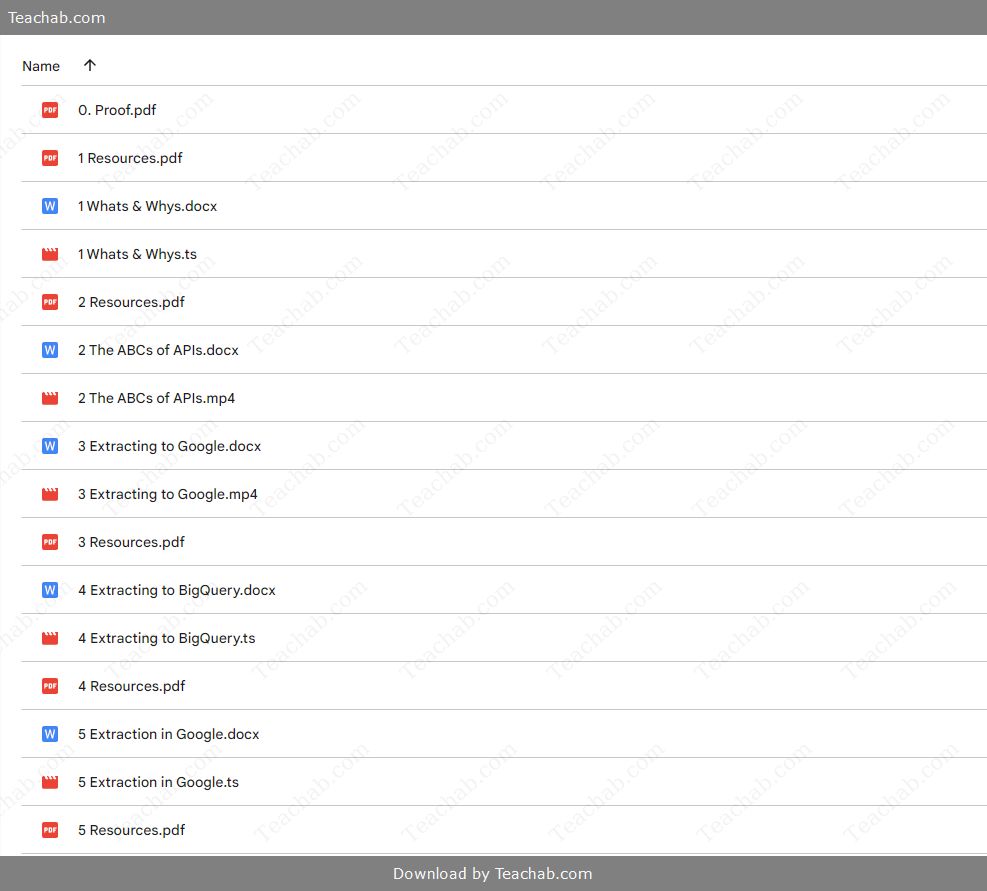

You may check content proof of “Data Extraction with Chris Mercer” below:

Data Extraction – Chris Mercer

Data extraction is a fundamental process in the world of research, data analysis, and business intelligence. It serves not only as a bridge between raw data and actionable insights but also shapes the integrity of the conclusions drawn from such research. Chris Mercer, known for his contributions to quantitative marketability discount modeling, highlights the importance of data extraction within various frameworks, especially in systematic reviews and data synthesis.

In this article, we will explore an extensive range of data extraction techniques, their importance in systematic reviews, common methods utilized by Chris Mercer, the tools and software solutions available, best practices, pitfalls, the pivotal role of the PICO framework, and the future trends in data extraction. Each section will delve into intricate details that solidify the significance of masterful data extraction in generating reliable analytical outcomes.

Overview of Data Extraction Techniques

Data extraction techniques resemble the diverse tools found in a craftsman’s toolkit, each best suited to particular tasks. Manual data extraction, the traditional approach, involves researchers identifying relevant data in research articles and entering it into designated formats. While effective, it resembles digging for gems in a vast landscape: time-consuming and occasionally prone to bias or oversight, especially in extensive literature reviews involving numerous studies.

In contrast, automated and semi-automated techniques use software tools and algorithms to speed up the extraction process. Picture this approach as a well-oiled machine, efficiently mining data from vast databases and returning it in structured formats. These methods rely on technology, including machine learning, to perform guided extractions based on predefined criteria, an efficient alternative that can reduce human error.

Additionally, the development of tailored data extraction forms can enhance data consistency and capture vital study components. This can be compared to drawing up a blueprint before building a house, ensuring that every necessary feature is accounted for.

Lastly, adhering to standardized guidelines, such as the Cochrane Handbook or PRISMA, helps ensure that essential data is gathered comprehensively. By following structured recommendations, reviewers can keep their individual extraction methods aligned, enhancing the overall quality of the review.

| Technique/Method | Characteristics | Pros | Cons |
| --- | --- | --- | --- |
| Manual Data Extraction | Human-centric data collection | High precision, familiarity | Time-consuming, prone to biases |
| Automated Techniques | Use of software tools for fast data collection | Labor-saving, scalable | Potentially less accurate without proper setup |
| Tailored Extraction Forms | Custom data collection frameworks | Consistency and relevant data capture | Requires development effort |
| Standardized Guidelines | Established protocols for data extraction | Enhances quality and transparency | May restrict creativity in unique cases |

By employing an array of techniques tailored to specific research circumstances, data extraction can evolve from a rudimentary process into a comprehensive system that delivers quality outputs essential for scholarly and business endeavors.

Importance of Data Extraction in Systematic Reviews

Data extraction serves as the cornerstone of systematic reviews, functioning as the essential step connecting research selection to substantive analysis. It is akin to the meticulous process of gathering raw materials needed for crafting a masterpiece; the quality and relevance of this data dictate the final outcome of the project.

Firstly, data extraction lays the foundation for analysis. The subsequent steps in systematic reviews, whether qualitative or quantitative, depend directly on the quality of the extracted data. If the data is deficient or inaccurately represented, it can lead to misleading meta-analytical conclusions, making the integrity of each data point crucial.

Moreover, it allows for a comprehensive risk of bias assessment. Evaluating the quality and credibility of studies is critical to ensuring that the review’s conclusions are valid. By systematically extracting pertinent information about potential biases, the risk assessment enables reviewers to navigate through the complexities of research quality.

Another essential aspect of data extraction is its impact on the generalizability of findings. A well-executed extraction process equips researchers with the details needed to understand the populations and contexts being studied. This thorough breakdown allows for robust comparisons and helps determine whether findings apply to broader settings or only to localized contexts.

Lastly, the principle of “garbage in, garbage out” emphasizes the importance of precise data extraction. Poor extraction practices lead to erroneous conclusions, resulting in invalid findings. Consequently, employing good practices in data extraction minimizes errors and enhances review reliability, directly affecting stakeholders reliant on these compiled results.

| Key Aspects | Description | Impact on Systematic Reviews |
| --- | --- | --- |
| Foundation for Analysis | Basis for all analyses in the review | Essential for accurate conclusions |
| Risk of Bias Assessment | Evaluating study quality | Validates reliability of final findings |
| Generalizability of Findings | Contextual understanding of results | Determines the applicability of conclusions |
| Quality Assurance | Ensuring accuracy and completeness | Minimizes risk of erroneous outputs |

Thus, the significance of proficient data extraction techniques cannot be overstated, forming the backbone of systematic reviews and ensuring their credibility and applicability.

Common Data Extraction Methods Employed by Chris Mercer

Chris Mercer, primarily known for methods in business valuation, also emphasizes data extraction in his approach. His practices can be likened to a craftsman who meticulously gathers rare resources to build a solid framework for evaluation. Here, we explore some common data extraction methods prominent in his methodologies, particularly in quantitative analysis:

- Quantitative Marketability Discount Model (QMDM): The QMDM exemplifies systematic data extraction, where cash flows, growth rates, and risks are methodically evaluated, ensuring relevant data points are captured. By employing predefined formulas and rules derived from market analysis, Mercer standardizes the extraction process for valuation.

- Statistical Sampling Techniques: Mercer incorporates various statistical techniques to extract representative data samples for analytical rigor. This approach ensures that extracted data sets reflect broader trends without succumbing to biases, maintaining a comprehensive perspective.

- Cross-Referencing: By cross-referencing extracted data from multiple sources, Mercer enhances the integrity of his analyses. This practice mirrors creating a mosaic, gathering diverse pieces to depict a coherent whole, which enriches the credibility of the conclusions drawn.

- Multi-Factor Analysis: Employed in evaluating market trends, this method involves extracting diverse factors influencing marketability discounts. It acknowledges that multiple elements interplay within valuations, leading to a more robust analysis.

- Data Validation Protocols: Implementing validation measures during extraction ensures the data’s quality and relevance. Mercer emphasizes the verification of data from original sources, akin to confirming the authenticity of an artifact before appraising its value.

In summation, Chris Mercer’s application of these data extraction methods amplifies the integrity and depth of his analyses, showing that precision and diligence in data extraction are integral for high-quality evaluations.
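The way cash flows, growth rates, and risk combine into a marketability discount can be sketched with a short discounted-cash-flow calculation. This is a simplified illustration, not Mercer's published model: the function name, the year-end cash-flow timing, and every input value below are assumptions chosen for the example.

```python
def marketability_discount(base_value, dividend_yield, dividend_growth,
                           value_growth, holding_years, required_return):
    """Return the implied discount as a fraction of base_value.

    Simplifying assumptions (hypothetical, for illustration only):
    interim cash flows arrive at year end, and the interest is sold at
    the end of the holding period for its grown marketable value.
    """
    pv_cash_flows = 0.0
    dividend = base_value * dividend_yield
    for year in range(1, holding_years + 1):
        # Discount each interim cash flow at the required holding-period return.
        pv_cash_flows += dividend / (1 + required_return) ** year
        dividend *= 1 + dividend_growth

    terminal_value = base_value * (1 + value_growth) ** holding_years
    pv_terminal = terminal_value / (1 + required_return) ** holding_years

    shareholder_value = pv_cash_flows + pv_terminal
    return 1 - shareholder_value / base_value


# Hypothetical inputs, not taken from any real appraisal.
discount = marketability_discount(
    base_value=100.0, dividend_yield=0.04, dividend_growth=0.03,
    value_growth=0.06, holding_years=7, required_return=0.18,
)
print(f"Implied discount: {discount:.1%}")
```

Each argument corresponds to a data point that has to be extracted and documented before the calculation can run, which is why disciplined extraction matters for the result.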

Tools and Systems for Data Extraction

The emergence of various tools and systems tailored for data extraction resembles the rapid evolution of technology in our daily lives. Just as smartphones have enhanced communication, dedicated platforms facilitate efficient data extraction. A brief overview of the most significant tools currently shaping the landscape follows below:

- Airbyte: An open-source data integration tool that provides a plethora of connectors suitable for both full and incremental data extraction. Its flexibility allows users to navigate diverse data environments effortlessly.

- Octoparse: This user-friendly web scraping tool alleviates complexities by enabling users to extract data without extensive coding expertise. With features such as scheduled tasks and automated data cleaning, it suits both beginners and seasoned analysts.

- Import.io: A cloud-based solution designed for web data extraction, Import.io transforms semi-structured data into structured formats. Its capability to handle dynamic websites makes it an invaluable tool for researchers seeking relevant information.

- Alteryx: By merging data extraction capabilities with advanced analytics, Alteryx stands out as a powerful platform for data analysts, making complex analyses accessible to a broader audience.

| Tool/Software | Features | Target Users |
| --- | --- | --- |
| Airbyte | Open-source, broad suite of connectors | Data engineers, programmers |
| Octoparse | No-code web scraping, task scheduling | Non-tech users, market researchers |
| Import.io | Cloud-based, semi-structured data conversion | Researchers, data analysts |
| Alteryx | Integrated analytics and data preparation | Business analysts, data scientists |

These tools illustrate how the world of data extraction is increasingly sophisticated, transforming how researchers, analysts, and organizations handle data.

Software Solutions for Streamlining Data Extraction

As the demand for effective data handling rises, software solutions designed to facilitate data extraction processes have become crucial. These solutions range from simple applications to comprehensive platforms catering to various needs and capabilities. Below are notable software tools:

- Apify: Primarily focused on web scraping and automation, Apify allows users to create custom data extraction tools efficiently. With its Crawlee library, users can build reliable scrapers that support multiple export formats, catering to a wide range of data requirements.

- Hevo Data: An ETL (Extract, Transform, Load) solution that allows businesses to consolidate data from multiple sources in real time. Hevo’s pre-built connectors facilitate seamless integrations, thus bolstering business intelligence efforts.

- Data Miner: A powerful Chrome extension simplifying the extraction of web data directly into spreadsheets. Its focus on structured and unstructured data makes it useful for quick tasks without elaborate setups.

- Fivetran: Known for automating data movement and replication, Fivetran offers extensive pre-built connectors. It is essential for companies that require instant, real-time data integration without extensive development resources.

| Software Solution | Primary Function | Key Advantages |
| --- | --- | --- |
| Apify | Web scraping and automation | Custom tool creation, various data formats |
| Hevo Data | ETL service for real-time data integration | Pre-built connectors, consolidation from sources |
| Data Miner | Browser extension for web data extraction | Simple interface, quick access |
| Fivetran | Automating data movement and replication | Real-time integration, extensive library |

These software solutions showcase how the data extraction process can be streamlined and optimized, enabling firms to focus on leveraging data effectively for informed decision-making.

Comparative Analysis of Data Extraction Tools

To aid organizations in selecting suitable data extraction tools for their specific needs, it is essential to perform a comparative analysis. The following characteristics will facilitate a deeper understanding of various options:

- User-Friendliness: Tools like Octoparse and Data Miner shine for their intuitive interfaces, granting non-technical users direct access to data extraction capabilities without requiring extensive training.

- Automation Capabilities: Solutions such as Apify, Hevo Data, and Fivetran excel in automating both extraction and integration processes, allowing businesses to manage large data volumes efficiently.

- Customization and Flexibility: Apify and Condor offer robust customization options, allowing users to tailor extraction processes according to specific requirements, catering to unique business needs.

- Integration with Other Systems: Hevo Data and Fivetran provide extensive options for connecting with various platforms, enhancing data utility for businesses relying on multiple sources.

- Cost-Effectiveness: While tools like Octoparse offer accessible pricing for smaller businesses, enterprise-level solutions might require higher investment upfront but often offer greater scalability and robust capabilities.

| Tool/Software | User-Friendliness | Automation | Customization | Integration | Cost-Effectiveness |
| --- | --- | --- | --- | --- | --- |
| Apify | Moderate | High | Excellent | High | Varies |
| Octoparse | Excellent | Moderate | Fair | Low | Accessible |
| Hevo Data | Fair | High | Fair | Excellent | Moderate |
| Fivetran | Moderate | High | Fair | High | High |

The choice of a data extraction tool must be guided by each organization’s specific needs, existing infrastructure, and budgetary constraints, ensuring optimal outcomes.
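One way to make such a comparison concrete is a weighted decision matrix. The sketch below is illustrative only: the ratings are copied from the comparison table, but the 1 to 5 numeric scale, the criterion keys, and the weights are assumptions, and the cost column is left out because its labels ("Varies", "Accessible") do not fit the same scale.

```python
# Assumed ordering of the qualitative labels used in the comparison table.
SCALE = {"Low": 1, "Fair": 2, "Moderate": 3, "High": 4, "Excellent": 5}

RATINGS = {
    "Apify": {"usability": "Moderate", "automation": "High",
              "customization": "Excellent", "integration": "High"},
    "Octoparse": {"usability": "Excellent", "automation": "Moderate",
                  "customization": "Fair", "integration": "Low"},
    "Hevo Data": {"usability": "Fair", "automation": "High",
                  "customization": "Fair", "integration": "Excellent"},
    "Fivetran": {"usability": "Moderate", "automation": "High",
                 "customization": "Fair", "integration": "High"},
}


def rank_tools(weights):
    """Return (tool, score) pairs sorted from highest to lowest score."""
    scores = {
        tool: sum(weights[criterion] * SCALE[label]
                  for criterion, label in ratings.items())
        for tool, ratings in RATINGS.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical weights for a team that cares most about integration.
for tool, score in rank_tools({"usability": 1, "automation": 2,
                               "customization": 1, "integration": 3}):
    print(tool, score)
```

Changing the weights changes the ranking, which is the point: the scores only make explicit the priorities an organization has already chosen.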

Best Practices in Data Extraction

Implementing best practices in data extraction not only enhances efficiency but also ensures reliability in the outcomes derived from the process. Here are pivotal recommendations:

- Development and Use of Data Extraction Forms: Design standardized extraction forms aligned with the research focus to eliminate redundancy and ensure clarity. These templates serve as essential tools that can guide data collation uniformly across studies.

- Streamlining Processes: Utilizing modern software tools tailored for systematic reviews can reduce manual effort and speed up collection processes. Automating repeated tasks fosters smooth workflow transitions in busy operations.

- Pilot Testing: Before deploying data extraction tools fully, it’s crucial to conduct pilot tests to identify potential bottlenecks or issues. This proactive approach encourages continuous improvement based on real-world observations.

- Clear Definition of Data Categories: Clearly outlining categories such as study characteristics, population details, interventions, and anticipated outcomes guides precise extractions. This leads to effective data organization and comparison.

- Regular Review and Updating of Guidelines: Keeping abreast of evolving methodologies, technologies, and best practices ensures that your extraction process retains its effectiveness over time.

| Best Practice | Description | Impact |
| --- | --- | --- |
| Development of Extraction Forms | Creation of standardized templates | Improves consistency and relevance |
| Streamlining Processes | Automation and simplifying tasks | Increases efficiency and productivity |
| Pilot Testing | Testing tools on small samples | Identifies issues before full implementation |
| Defined Data Categories | Establishing clear categories for data | Ensures precise extraction |
| Review of Guidelines | Keeping methodologies updated | Keeps extraction practices current |

Through these practices, organizations can refine their data extraction processes, ensuring they yield high-quality data that enhances analytical insights.
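A standardized extraction form with clearly defined categories can be as simple as a fixed set of CSV columns that every reviewer fills in. The sketch below assumes a hypothetical set of field names and rejects any record that leaves a category empty; a real form would follow the review protocol.

```python
import csv
import io

# Hypothetical data categories; adapt to the review protocol.
FIELDS = ["study_id", "study_design", "population",
          "intervention", "outcome", "extractor"]


def write_extraction_form(records, handle):
    """Write records as CSV, refusing rows with missing categories."""
    writer = csv.DictWriter(handle, fieldnames=FIELDS)
    writer.writeheader()
    for record in records:
        missing = [name for name in FIELDS if not record.get(name)]
        if missing:
            raise ValueError(f"{record.get('study_id', '?')}: missing {missing}")
        writer.writerow(record)


# Pilot the form on a single made-up record before wider use.
buffer = io.StringIO()
write_extraction_form(
    [{"study_id": "S1", "study_design": "RCT", "population": "Adults",
      "intervention": "Drug A", "outcome": "Blood pressure",
      "extractor": "Reviewer 1"}],
    buffer,
)
print(buffer.getvalue())
```

Running the form against a handful of studies first, as the pilot-testing recommendation suggests, quickly shows which categories are ambiguous or routinely left blank.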

Guidelines for Effective Data Extraction

Incorporating effective guidelines into data extraction processes is crucial for successful and reliable outcomes. These guidelines should encompass the following principles:

- Establish Clear Objectives: Before initiating data extraction, clearly outline the aims and expected outcomes. This clarity will guide efforts and prevent wasting resources on irrelevant data.

- Utilize Structured Templates: Templates that detail specific data points relevant to the research context simplify the extraction process. This organization streamlines information gathering and enhances accuracy.

- Leverage Technology: Whenever feasible, employ software solutions for extracting and managing data. These tools can automate redundant tasks, reduce error rates, and increase overall efficiency.

- Data Validation Steps: Implement measures that ensure accuracy and completeness in the data collected. Employ techniques such as cross-checking and validation rules to safeguard data integrity.

- Regular Training for Personnel: Providing regular training sessions for individuals involved in data extraction improves their proficiency in relevant tools and practices, fostering a culture of rigor within the team.

- Cross-Review Extracted Data: Engaging in peer reviews or consensus discussions regarding the extracted data enhances its credibility. This collaborative effort helps identify potential issues arising from individual interpretations or mistakes.

| Guideline | Description | Benefits |
| --- | --- | --- |
| Clear Objectives | Establishing clear goals for data extraction | Focuses efforts and resources |
| Structured Templates | Using predefined forms for data collection | Increases efficiency and reduces errors |
| Leverage Technology | Employing software solutions | Automates tasks, improves data management |
| Data Validation | Ensuring accuracy through validation processes | Enhances data reliability |
| Regular Training | Continuously training staff on best practices | Improves staff proficiency in tools |
| Cross-Reviewing Data | Engaging in collaborative accuracy verification | Enhances data credibility |

By adhering to these guidelines, organizations can optimize their data extraction processes, ultimately leading to insightful conclusions and sound decision-making.

Common Pitfalls to Avoid in Data Extraction Processes

Navigating the landscape of data extraction processes can be challenging, but avoiding common pitfalls can pave the way for success. Here are several critical issues to be mindful of:

- Failing to Define Clear Goals: Without well-defined objectives, the range of data gathered can become unwieldy and irrelevant. This lack of focus can waste time and resources, leading to inaccuracies.

- Ignoring Data Quality Assurance: Relying on unverified data can lead to misleading conclusions. Whether through automated checks or manual oversight, ensuring quality should be a non-negotiable part of the extraction process.

- Lack of Standardization: Not using standardized extraction templates increases the risk of inconsistencies within datasets. Creating a unified approach guarantees that relevant data is consistently captured.

- Neglecting Data Validation: When data validation is overlooked, inaccuracies become hard to spot and can materially distort results. Regular checks against original sources are crucial for reliable outputs.

- Overlooking Security Measures: As data breaches become more prevalent, neglecting the security of sensitive information can lead to severe repercussions, highlighting the necessity for robust security protocols.

| Common Pitfall | Description | Consequences |
| --- | --- | --- |
| Failing to Define Goals | Unclear objectives lead to irrelevant data | Wastes time and resources, reduces accuracy |
| Ignoring Data Quality | Neglecting verification leads to poor conclusions | Compromises reliability |
| Lack of Standardization | Inconsistencies affect datasets | Reduces data clarity and usability |
| Neglecting Validation | Skipping checks causes erroneous data collection | Decreases trust in data |
| Overlooking Security | Inadequate protections pose risks | Potential data breaches and legal exposure |

By strategically avoiding these pitfalls, organizations can enhance the efficacy and reliability of their data extraction processes, fortifying the foundations upon which conclusions are drawn.

Role of PICO in Data Extraction

The PICO framework (Population, Intervention, Comparison, Outcome) serves as a guide through the intricate landscape of data extraction, particularly for systematic reviews. By defining the essential components within a review, PICO allows researchers to home in on the pertinent details required for rigorous analysis.

Understanding the PICO Framework for Data Extraction

Understanding the PICO framework enables researchers to construct more focused and effective data extraction templates. Each of the four components plays a significant role:

- Population: This defines who is being studied. By specifying characteristics like age and gender, researchers can ensure that only relevant studies corresponding to their defined population parameters are included.

- Intervention: What treatment or exposure is evaluated? Outlining the intervention specifies what data will be extracted concerning the clinical question, enhancing relevance and accuracy.

- Comparison: Identifying a control or alternative treatment further enriches the extraction process. The comparison data provides context for understanding the intervention’s effectiveness.

- Outcome: Clearly defined outcomes indicate what results matter to the research question. This component sharpens focus by guiding analysts on which data points should be prioritized during extraction.

| PICO Element | Description | Significance |
| --- | --- | --- |
| Population | Defines the group under study | Ensures inclusion criteria align with objectives |
| Intervention | Specifies the treatment being investigated | Guides data extraction relevance |
| Comparison | Identifies control or alternative interventions | Enriches context for analysis |
| Outcome | Defines the results being measured | Sharpens focus during data extraction |

Through a structured approach, using the PICO framework allows for comprehensive and systematic data extraction, producing reliable insights directly tied to the research goals.

Utilizing PICO for Optimized Data Extraction Outcomes

Harnessing the PICO framework can significantly enhance data extraction outcomes. In pursuit of optimized results, consider these strategies:

- Clear Specification of PICO Elements: Researchers should meticulously define each PICO element during the review protocol. This clarity aligns data extraction efforts with overarching study objectives.

- Refining Search Strategies: PICO components guide the development of targeted searches that capture relevant studies. Generating keywords based on PICO ensures accuracy in retrieval, minimizing extraneous data.

- Adapting Extraction Templates: Design extraction templates to reflect PICO elements. Structuring data collection around these components fosters accurate classification of pertinent information.

- Feedback Loop for Iteration: Encourage feedback on how well the PICO framework delineates necessary data. This iterative process refines extraction protocols over time, promoting continual learning.

| PICO Strategy | Description | Benefits |
| --- | --- | --- |
| Clear Specification | Clearly define each component | Aligns data extraction with research goals |
| Refined Search Strategies | Utilizing PICO to generate relevant keywords | Enhances search accuracy and reduces irrelevant results |
| Adapted Extraction Templates | Structuring templates around PICO elements | Fosters accurate and categorized data collection |
| Feedback Loop | Iterative process for refining protocols | Promotes continuous improvement |

Through these strategies, the integration of PICO enhances the efficiency and validity of systematic reviews, facilitating optimal data extraction outcomes.
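As a minimal sketch of how PICO elements can drive both a template and a search strategy, the example below stores synonyms for each element and combines them into a boolean query. The class name, the example terms, and the query syntax are assumptions; each bibliographic database has its own search syntax, so the output would need adapting.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PicoQuestion:
    """Synonym lists for each PICO element (hypothetical structure)."""
    population: List[str]
    intervention: List[str]
    comparison: List[str] = field(default_factory=list)
    outcome: List[str] = field(default_factory=list)

    def search_string(self) -> str:
        """OR the synonyms within an element, AND the elements together."""
        groups = []
        for terms in (self.population, self.intervention,
                      self.comparison, self.outcome):
            if terms:  # skip elements with no search terms
                joined = " OR ".join(f'"{term}"' for term in terms)
                groups.append(f"({joined})")
        return " AND ".join(groups)


question = PicoQuestion(
    population=["hypertension", "high blood pressure"],
    intervention=["Drug A"],
    outcome=["blood pressure reduction"],
)
print(question.search_string())
```

Keeping the terms in one structure means the same PICO definition can later label the columns of an extraction template, so search and extraction stay aligned.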

Data Validation and Quality Control

Validation and quality control are indispensable when conducting data extraction. The integrity of extracted data not only impacts the reliability of conclusions but also determines the overall quality of analyses and findings. Thus, a focus on strong validation practices is paramount.

Strategies for Ensuring Data Accuracy

Adopting robust strategies for data validation and quality assurance reduces the risk of errors and enhances outcome reliability. The following methods contribute to this essential facet of data extraction:

- Establish Data Governance Policies: Developing meticulous policies defining data management roles, standards, and best practices secures consistency and accuracy in data handling.

- Data Validation Techniques:

  - Field-Level Validation: Ensuring that individual data entries meet established criteria, such as acceptable formats.

  - Cross-Field Validation: Confirming logical relationships between data fields. For instance, checking that a study’s start date does not fall after its end date confirms that the record is coherent.

- Monitoring Quality Metrics: Regular assessments of data quality through metrics like accuracy, completeness, and consistency empower organizations to detect issues proactively.

- Regular Audits and Reviews: Routine evaluations of datasets against predetermined standards safeguard against decay in data quality and maintain high performance.

- Training Employees on Best Practices: Comprehensive training programs enable individuals to adhere to effective data entry and processing guidelines, cultivating a culture of precision within the organization.

| Strategy | Description | Benefits |
| --- | --- | --- |
| Data Governance Policies | Definition of roles and standards for data management | Increases consistency and accuracy |
| Validation Techniques | Procedures for verifying data integrity | Enhances the credibility of the extracted data |
| Quality Metrics Monitoring | Consistent tracking of data quality | Helps detect and address issues promptly |
| Regular Audits | Routine evaluations of datasets | Maintains data quality and relevance |
| Employee Training | Educating staff on best practices | Fosters accuracy and competence |

By implementing these strategies, organizations can cultivate a robust foundation for high-quality data extraction, generating analyses rooted in reliable data.
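Field-level and cross-field validation can be illustrated with a small checker that returns a list of problems for one extracted record. The field names, the study identifier format, and the specific rules are hypothetical; a real project would derive them from its own extraction form.

```python
import re
from datetime import date

# Hypothetical identifier format, e.g. "Smith2020".
STUDY_ID = re.compile(r"^[A-Z][A-Za-z]+\d{4}$")


def validate_record(record):
    """Return a list of validation errors (empty if the record passes)."""
    errors = []

    # Field-level checks: each value is judged on its own.
    if not STUDY_ID.match(record.get("study_id", "")):
        errors.append("study_id: unexpected format")
    sample_size = record.get("sample_size")
    if not isinstance(sample_size, int) or sample_size <= 0:
        errors.append("sample_size: must be a positive integer")

    # Cross-field check: the two dates must be in chronological order.
    start, end = record.get("start_date"), record.get("end_date")
    if isinstance(start, date) and isinstance(end, date):
        if start > end:
            errors.append("start_date is after end_date")
    else:
        errors.append("start_date/end_date: both must be dates")

    return errors


record = {"study_id": "Smith2020", "sample_size": 120,
          "start_date": date(2018, 1, 1), "end_date": date(2019, 6, 30)}
print(validate_record(record))
```

Returning every error at once, rather than stopping at the first, makes the output usable as an audit log during the regular reviews described above.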

Importance of Risk of Bias Assessment in Data Extraction

The assessment of bias during data extraction is crucial in ensuring the overall quality and validity of reviews. Risk of bias assessments help ascertain whether conclusions are genuinely reflective of the evidence and not artifacts of methodological flaws. Below are key considerations regarding why this assessment is pivotal:

- Quality of Evidence: Evaluating the risk of bias contributes to an informed review of study quality, bolstering the legitimacy of conclusions. A heightened awareness of biases enhances the critical appraisal of gathered data.

- Data Validation and Quality Control: Considering bias is a form of quality control within data extraction. Addressing potential biases ensures the credibility of the outcomes derived from extracted data.

- Guiding Meta-analysis: In quantitative reviews, identifying studies with potential biases influences whether they should be included in aggregated analyses. An understanding of bias allows researchers to make informed decisions regarding study weighting.

- Transparency and Reproducibility: A rigorous bias assessment enhances transparency, allowing future researchers to reproduce the review from well-documented methods and findings.

- Utilization of Standardized Tools: Standardized assessment tools such as RoB 2 improve rigor in bias evaluation, enhancing researchers’ capacity to extract and assess valid data.

| Importance | Description | Benefits |
| --- | --- | --- |
| Quality of Evidence | Ensures systematic review conclusions are valid | Bolsters legitimacy of study outcomes |
| Data Validation Control | Evaluating biases safeguards data integrity | Enhances confidence in extracted conclusions |
| Guiding Meta-analysis | Identifying bias influences analysis choices | Improves the quality of aggregated findings |
| Transparency and Replicability | Enhances reproducibility of reviews | Supports scientific integrity |
| Utilization of Standardized Tools | Employing tools for effective bias assessments | Promotes consistency and thorough evaluations |

In conclusion, risk of bias assessment plays a fundamental role in enhancing the accuracy and reliability of data extracted during systematic reviews, promoting valid and credible findings.
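One simple way bias judgments can guide a meta-analysis is a sensitivity check: compute the result with all studies, then again without those judged at high risk of bias. The sketch below uses made-up effect values and an unweighted mean purely for illustration; the record layout and function name are assumptions, and a real analysis would weight studies appropriately.

```python
from statistics import mean


def sensitivity_by_bias(studies, exclude=("High",)):
    """Compare the unweighted mean effect with and without excluded studies."""
    all_effects = [study["effect"] for study in studies]
    kept = [study["effect"] for study in studies
            if study["risk_of_bias"] not in exclude]
    return {
        "all_studies": mean(all_effects),
        "restricted": mean(kept) if kept else None,
        "n_excluded": len(all_effects) - len(kept),
    }


# Hypothetical extracted data with an overall judgment per study.
studies = [
    {"study": "A", "effect": -5.0, "risk_of_bias": "Low"},
    {"study": "B", "effect": -3.0, "risk_of_bias": "Some concerns"},
    {"study": "C", "effect": -10.0, "risk_of_bias": "High"},
]
print(sensitivity_by_bias(studies))
```

A large gap between the two estimates signals that the conclusion leans on the studies most likely to be flawed.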

Data Extraction Process in Systematic Reviews

The data extraction process in systematic reviews involves systematic and structured steps to facilitate thorough data organization. By following a well-defined workflow, researchers can ensure that all essential information is meticulously captured.

Step-by-Step Data Extraction Workflow

- Define Objectives and Key Questions: Establish the overarching research questions that the systematic review intends to address. This allows researchers to identify data points necessary for answering these questions.

- Select Data Extraction Framework: Determine the appropriate framework (e.g., PICO) to guide the data extraction process. This framework will standardize how data is collected across studies.

- Develop Data Extraction Tools: Create data extraction forms or templates clearly outlining the information to be extracted. This could encompass study characteristics, outcomes, and methodologies, ensuring comprehensive data collection.

- Pilot Testing: Test the data extraction tools on a small sample of studies to identify potential issues with data capture. Adjustments can be made before full implementation based on feedback from this pilot phase.

- Data Extraction by Reviewers: Assign each study to two or more reviewers who extract data independently. Independent extraction exposes inconsistencies, which can then be resolved collectively, enhancing data reliability.

- Data Comparison and Reconciliation: After extraction, data collected by different reviewers is compared. Discrepancies are reconciled to ensure that the final dataset is accurate and reliable.

- Organize Data into Evidence and Summary Tables: Once the extraction is finalized, data is organized into evidence tables, detailing findings for each study, and summary tables, providing overarching insights collected from the review.

| Step | Description | Importance |
| --- | --- | --- |
| Define Objectives | Establishes clear research objectives | Guides data extraction efforts |
| Select Data Extraction Framework | Chooses appropriate guidelines | Promotes standardization |
| Develop Data Extraction Tools | Creates structured forms | Ensures comprehensive data collection |
| Pilot Testing | Tests extraction tools on samples | Identifies potential issues early |
| Data Extraction by Reviewers | Involves multiple reviewers for accuracy | Gathers varied perspectives, enhances reliability |
| Data Comparison and Reconciliation | Compares data extracted by different reviewers | Ensures accuracy and consistency |
| Organize into Tables | Summarizes key findings | Facilitates clear presentation and analysis |

This structured workflow is designed to streamline the data extraction process, ensuring that systematic reviews are comprehensive, accurate, and meaningful.
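The comparison and reconciliation step lends itself to a small script that lists every field on which two reviewers disagree. The data layout (a dictionary of studies, each holding a dictionary of fields) and the function name are assumptions made for this sketch.

```python
def find_discrepancies(reviewer_a, reviewer_b):
    """Return {study_id: {field: (value_a, value_b)}} for every disagreement."""
    discrepancies = {}
    for study_id in sorted(set(reviewer_a) | set(reviewer_b)):
        a = reviewer_a.get(study_id, {})
        b = reviewer_b.get(study_id, {})
        diffs = {
            name: (a.get(name), b.get(name))
            for name in sorted(set(a) | set(b))
            if a.get(name) != b.get(name)
        }
        if diffs:
            discrepancies[study_id] = diffs
    return discrepancies


# Hypothetical independent extractions of the same studies.
first = {"S1": {"sample_size": 100, "outcome": "SBP"}}
second = {"S1": {"sample_size": 110, "outcome": "SBP"},
          "S2": {"sample_size": 50}}
print(find_discrepancies(first, second))
```

The script only flags disagreements. Deciding which value is correct still requires going back to the source article or consulting a third reviewer.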

Examples of Evidence and Summary Tables

To illustrate the outcomes of the data extraction process effectively, researchers organize data into evidence and summary tables that enhance comparability and clarity. The following examples summarize data extracted from multiple studies, facilitating an overview of findings:

Evidence Table Example:

| Study | Population | Intervention | Comparison | Outcome | Results |
| --- | --- | --- | --- | --- | --- |
| Smith et al. (2020) | Adults with Hypertension | Drug A | Placebo | Blood Pressure Reduction | -5 mmHg (p < 0.05) |
| Doe et al. (2021) | Elderly Patients | Drug B | Drug A | Mortality Rate | No Significant Difference |

Summary Table Example:

| Intervention | Total Studies | Average Effect Size | 95% CI | Number of Participants |
| --- | --- | --- | --- | --- |
| Drug A | 5 | -4.2 mmHg | (-5, -3) | 1,000 |
| Drug B | 3 | -1.5 mmHg | (-2, -1) | 500 |

These tables encapsulate data succinctly and allow for the rapid synthesis of information, serving as effective tools in decision-making within systematic reviews.
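The article does not say how the "Average Effect Size" and "95% CI" columns are derived. One common approach, shown here purely as an assumption, is a fixed-effect inverse-variance weighted mean: each study is weighted by the inverse of its squared standard error, and the interval uses a normal approximation. The function name `pooled_effect` and the per-study inputs below are hypothetical and are not the data behind the table above.

```python
import math


def pooled_effect(effects, standard_errors, z=1.96):
    """Fixed-effect inverse-variance pooling.

    Returns (pooled estimate, lower bound, upper bound) using a normal
    approximation; z=1.96 corresponds to a 95% confidence interval.
    """
    if len(effects) != len(standard_errors) or not effects:
        raise ValueError("need one standard error per effect, and at least one study")
    if any(se <= 0 for se in standard_errors):
        raise ValueError("standard errors must be positive")

    weights = [1.0 / se**2 for se in standard_errors]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, effects)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled - z * pooled_se, pooled + z * pooled_se


# Hypothetical per-study blood pressure changes (mmHg) and standard errors.
study_effects = [-5.0, -4.5, -3.0]
study_ses = [1.0, 1.5, 0.8]

estimate, ci_low, ci_high = pooled_effect(study_effects, study_ses)
print(f"pooled effect: {estimate:.2f} mmHg (95% CI {ci_low:.2f} to {ci_high:.2f})")
```

A fixed-effect model assumes all studies estimate the same underlying effect. When studies differ materially in population or design, a random-effects model is usually the more defensible choice.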

Case Studies and Applications

The practical applications of data extraction methodologies underscore their significance across a variety of disciplines, particularly in areas such as healthcare, business valuation, and market analysis. They highlight the ways data extraction shapes outcomes and drives evidence-based decisions.

Real-World Applications of Chris Mercer’s Data Extraction Methodology

Chris Mercer’s methodologies, while primarily focused on quantitative marketability discount modeling, align closely with rigorous data extraction principles. His work showcases real-world applications across numerous appraisal settings:

- Quantitative Marketability Discount Model (QMDM): This model effectively draws on evidence from comparable market transactions to provide accurate appraisals in business valuation scenarios. The rigorous data extraction underpinning the QMDM allows for informed assessments of the relative marketability of minority interests.

- Estate and Gift Tax Cases: Mercer’s methodologies have successfully navigated the complexities of IRS taxation standards. His valuation practices utilize solid data extraction techniques to substantiate claims, demonstrating the reliability of his appraisals even under scrutiny.

- Market Analysis: By employing statistically sound data extraction methods, Mercer provides analyses that foster client decision-making in investment contexts. This informs strategies by identifying market shifts and potential opportunities, illustrating the economic relevance of proficient data extraction.

- Educational Outreach: Mercer’s teaching and publication efforts empower practitioners by elaborating on the principles of data extraction and analysis within the realm of marketability discounts. His dedication to expanding understanding ensures his methodologies continue to influence business valuation practices meaningfully.

| Application | Description | Impact |
| --- | --- | --- |
| Quantitative Modeling | Using comparative market data for valuations | Informs precise appraisals of interests |
| Tax Compliance | Assistance in estate and gift tax scenarios | Enhances credibility in tax evaluations |
| Market Analysis | Data-driven insights for investment strategies | Supports informed decisions for stakeholders |
| Educational Initiatives | Teaching data extraction practices to professionals | Promotes widespread knowledge in valuation |

Through these applications, Chris Mercer’s methodologies demonstrate how effective data extraction not only influences academia but also impacts business valuation standards across various environments.

Lessons Learned from Case Studies in Data Extraction

The examination of case studies presents rich insights into the importance of data extraction processes across numerous sectors. Here are critical lessons learned that illuminate best practices and strategies for effective implementation:

- Importance of Data Quality: Organizations have encountered challenges stemming from poor data quality during extraction processes. Lessons learned emphasize rigorous validation during the extraction phase, advocating for deduplication, cleansing, and standardization to mitigate errors.

- Automation Yielding Better Efficiency: Companies that integrate automated data extraction solutions have significantly improved operational efficiency. Case studies highlight fewer manual entry errors and less time spent on routine tasks, showing a clear return on investment from technology adoption.

- Adaptability to Changing Data Sources: Organizations that keep their data extraction strategies flexible adapt more readily to evolving datasets. Many case studies reveal that scalable extraction tools, which can accommodate new formats and platforms, are critical for long-term success.

- Real-Time Data Processing: Companies recognize the necessity of extracting and analyzing data in real-time for responsive strategic decisions. Those that invest in immediate data extraction technologies gain a competitive advantage by offering timely insights.

- Regulatory Compliance: With data governance becoming increasingly important, organizations must prioritize conformity with regulations like GDPR. Case studies show that aligning extraction methods with legal standards is essential for long-term sustainability and reputation.

| Key Lesson | Description | Implications for Practice |
| --- | --- | --- |
| Importance of Data Quality | Rigorous validation is key | Minimizes risks and ensures reliable outputs |
| Automation Efficiency | Tech adoption leads to higher productivity | Reduces manual errors, enhances operational efficacy |
| Adaptability | Tools need to accommodate evolving data sources | Promotes resilience in a changing data landscape |
| Real-Time Processing | Immediate extraction technologies support decision-making | Improves responsiveness and competitiveness |
| Regulatory Compliance | Proper alignment with laws safeguards information | Protects against potential legal repercussions |

These lessons reflect how organizations can navigate the complexities of data extraction effectively, ensuring robust practices that foster strong outcomes.
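The data quality lesson above names deduplication, cleansing, and standardization. A minimal sketch of those three steps on extracted records might look like the following. The field names (`study`, `intervention`), the helper names, and the sample rows are all hypothetical, chosen only to illustrate the idea.

```python
def standardize(record):
    """Cleanse and standardize one record.

    Collapses stray whitespace in both fields and puts the intervention
    name into a single, consistent capitalization.
    """
    return {
        "study": " ".join(record["study"].split()),
        "intervention": " ".join(record["intervention"].split()).title(),
    }


def deduplicate(records):
    """Keep the first occurrence of each (study, intervention) pair."""
    seen = set()
    unique = []
    for record in records:
        key = (record["study"].casefold(), record["intervention"].casefold())
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


# Hypothetical raw rows: the first two describe the same extraction.
raw_records = [
    {"study": "Smith et al. (2020)", "intervention": "drug a"},
    {"study": "  Smith et al.  (2020) ", "intervention": "Drug A"},
    {"study": "Doe et al. (2021)", "intervention": "DRUG B"},
]

cleaned_records = deduplicate([standardize(r) for r in raw_records])
for row in cleaned_records:
    print(row)
```

Standardizing before deduplicating matters: the two "Smith" rows only compare as equal once whitespace and capitalization have been normalized.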

Future Trends in Data Extraction

As technology rapidly evolves, so do the methodologies used in data extraction. Anticipating these future trends is vital for organizations looking to remain competitive in an increasingly data-driven world. Here are key directions projected for the upcoming years:

Advancements in Automated Data Extraction

Automated data extraction continues to evolve, promising significant improvements in data management:

- Increased Use of Generative AI: Generative AI is expected to enhance automated data extraction through natural language processing that lets systems interpret context, making extraction tools easier to use for people with varying levels of expertise.

- Implementation of Augmented Intelligence: The trend towards augmented intelligence, where AI complements human decision-making, will create tools capable of managing routine data extraction while allowing analysts to focus on complex interpretations. This synergy will amplify productivity and minimize human error.

- Automation of Complex Data Handling: As organizations aim to free their workforce from labor-intensive extraction tasks, AI and ML are set to automate intricate processes that currently require extensive manual tuning, improving efficiency without sacrificing accuracy.

- Enhanced Optical Character Recognition (OCR): Advancements in OCR technology will improve the extraction of text from scanned files and images, facilitating data capture from varied formats, thus improving workflow efficiency across business operations with disparate document types.

- Data Quality Improvement through Automation: Automation tools will increasingly integrate validation features during extraction, leading to greater data quality assurance. Such measures ensure extracted data maintain high quality from the outset, promoting better analytical outcomes.

| Trend | Description | Potential Benefits |
| --- | --- | --- |
| Generative AI | Intuitive processing of natural language data | Simplifies data interactions for users |
| Augmented Intelligence | AI-enhanced tools managing extraction processes | Increases productivity and error reduction |
| Complex Data Automation | Automating labor-intensive data tasks | Frees up human resources for strategic focus |
| Enhanced OCR | Improved extraction from non-standard formats | Streamlines data input across various industries |
| Quality Improvement | Integrating validation during extraction | Elevates overall data reliability |

These anticipated advancements signal exciting new methods and tools that will reshape how organizations approach data extraction in pursuit of efficient, accurate data management.
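The idea of building validation into the extraction step can be illustrated with a simple rule-based check that runs as each record is captured. This is a sketch under stated assumptions, not a description of any particular tool: the field names, the rules, and the function name `validate_record` are hypothetical.

```python
REQUIRED_FIELDS = ("study", "intervention", "participants", "ci_lower", "ci_upper")


def validate_record(record):
    """Return a list of human-readable problems; an empty list means the record passed."""
    errors = []

    # Rule 1: every required field must be present and non-empty.
    for field in REQUIRED_FIELDS:
        if record.get(field) in (None, ""):
            errors.append(f"missing field: {field}")

    # Rule 2: a participant count, when given as an integer, must be positive.
    participants = record.get("participants")
    if isinstance(participants, int) and participants <= 0:
        errors.append("participants must be positive")

    # Rule 3: the confidence interval bounds must be in order.
    lower, upper = record.get("ci_lower"), record.get("ci_upper")
    if isinstance(lower, (int, float)) and isinstance(upper, (int, float)) and lower > upper:
        errors.append("ci_lower exceeds ci_upper")

    return errors


# Hypothetical extracted records: one clean, one with three problems.
good_record = {
    "study": "Smith et al. (2020)",
    "intervention": "Drug A",
    "participants": 200,
    "ci_lower": -5.0,
    "ci_upper": -3.0,
}
bad_record = {
    "intervention": "Drug B",
    "participants": -5,
    "ci_lower": -1.0,
    "ci_upper": -2.0,
}

for label, record in (("good", good_record), ("bad", bad_record)):
    print(label, validate_record(record))
```

Returning a list of problems, rather than raising on the first one, lets a reviewer see everything wrong with a record in a single pass.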

Role of Artificial Intelligence in Enhancing Data Extraction Efficiency

The role of AI is proving to be transformative, enhancing various facets of data extraction:

- Pattern Recognition and Classification: AI algorithms effectively analyze large datasets, identifying patterns that assist in data categorization. This capability allows organizations to conduct nuanced analyses and reduce time spent on manual classification.

- Natural Language Processing: With advancements in NLP, AI systems will increasingly interpret and summarize unstructured data from sources like social media and emails. This technology expands the breadth of viable data, increasing the diversity available for extraction.

- Integration of Machine Learning Models: Responding to changing data landscapes, machine learning models can predict and adapt extraction needs. Organizations investing in ML technologies ensure their extraction methods remain cutting-edge and effective.

- Reduction of Human Error: Automating data extraction tasks significantly diminishes human error, a common pitfall in manual systems. Implementing AI tools increases trust in data quality, leading to more reliable decision-making processes.

- Enhanced Data Governance: AI technologies are also being employed to monitor data governance policies, ensuring that data handling procedures align with institutional standards while maintaining data accuracy and security.

| AI Role | Description | Benefits |
| --- | --- | --- |
| Pattern Recognition | Analyzes patterns for data classification | Reduces manual classification workload |
| Natural Language Processing | Autonomously interprets and summarizes data | Broadens the scope of data extraction sources |
| Machine Learning | Adapts extraction methods based on new inputs | Maintains effective data handling capabilities |
| Reduction of Human Errors | Automates tasks reducing manual intervention | Increases the reliability of extracted data |
| Enhanced Governance | Monitors adherence to standards in data handling | Secures data quality and compliance |

Through the alignment of AI technologies within the data extraction sphere, organizations can foresee a future characterized by accuracy, efficiency, and innovation.

In conclusion, data extraction, particularly as developed and utilized by Chris Mercer, forms an essential pillar of research methodology, enabling systematic reviews, improving business intelligence, and ultimately supporting sound decision-making across diverse fields. By understanding and implementing best practices, leveraging new technologies, and avoiding common pitfalls, practitioners can harness the full potential of data extraction processes to deliver reliable, valuable insights.

