-

×

Soul Medicine with David Lion

1 × 319,00 $

Soul Medicine with David Lion

1 × 319,00 $ -

×

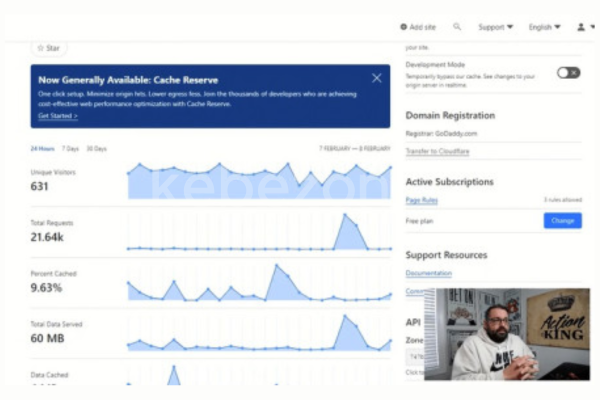

Build and Sell APIs – Establish a New Income Stream!

1 × 5,00 $

Build and Sell APIs – Establish a New Income Stream!

1 × 5,00 $ -

×

Working with the Masters Course with Chunyi Lin

1 × 46,00 $

Working with the Masters Course with Chunyi Lin

1 × 46,00 $

Intermediate Statistics with Milly KC

Understanding statistics is akin to learning a new language; the more you practice, the more fluent you become. Intermediate statistics serves as a bridge, connecting the foundations of basic statistics with the intricate world of advanced data analysis. In this article, we will explore intermediate statistics as presented by Milly KC, emphasizing its significance in research, the application of statistical methods, and overcoming common challenges faced by learners.

Throughout the discussion, you will discover the importance of various concepts, such as descriptive statistics, probability theory, and their role in real-world applications. Let’s embark on this statistical journey to deepen our comprehension and application of the principles essential for effective data analysis, encapsulated in a cohesive and engaging manner.

Overview of Intermediate Statistics

Intermediate statistics delves into the nuanced aspects of data analysis that often go unnoticed in basic statistics. Just as a musician understands the significance of both melody and rhythm, statisticians must grasp both descriptive and inferential statistics to interpret data accurately. Descriptive statistics summarize and present data in a meaningful way, while inferential statistics allow researchers to make predictions and generalizations about a population based on sample data.

The complexity of human behavior and the diverse array of data types necessitate an understanding of statistical techniques that can adapt to various contexts and challenges. Milly KC’s work brings these elements together, fostering a sense of confidence and clarity among students venturing into the realm of statistics. With explanatory tables, practical applications, and case studies, her approach emphasizes the real-world impact of statistics on research and decision-making, making the subject matter not only educational but also engaging.

Key Concepts in Statistics

Key concepts serve as the structural components around which intermediate statistics revolve.

- Descriptive Statistics: Measures of central tendency such as mean, median, and mode provide insights into data distribution. They serve as the launching point for deeper analysis, indicating where the bulk of data lies.

- Probability Theory: This provides the theoretical foundation for making inferences from samples. Concepts like sample space, events, and conditional probability enable researchers to understand the likelihood of various outcomes, akin to forecasting the weather.

- Inferential Statistics: Equipped with techniques like hypothesis testing and confidence intervals, researchers can draw conclusions from their samples and infer about the broader population. This is crucial in many fields, including healthcare, social sciences, and market research.

- Measuring Relationships: Understanding correlation and regression allows statisticians to explore relationships between two or more variables, further integrating probability theory with real-world applications.

- Statistical Software: Gaining proficiency in software tools like SPSS enhances data analysis skills, making statistical methods accessible and applicable in practice.

These key concepts validate the assertion that statistics is a powerful tool for understanding and transforming data into actionable insights.

Importance of Intermediate Statistics in Research

The importance of intermediate statistics in research cannot be overstated; it enables scholars and practitioners to derive meaningful conclusions from complex data sets. By applying statistical methods effectively, researchers can explore relationships, test hypotheses, and make significant contributions to their fields.

- Understanding Relationships: Intermediate statistics allows researchers to assess the strength and nature of relationships between multiple variables. For example, in medical studies, determining how lifestyle factors influence health outcomes is essential.

- Advanced Data Analysis Techniques: Statistical methods like ANOVA enable researchers to compare means across groups, revealing how various factors contribute to observable outcomes.

- Hypothesis Testing: Through rigorous hypothesis testing, researchers can make informed decisions based on evidence, ultimately driving advancements in knowledge. Utilizing t-tests or chi-square tests helps ascertain the significance of findings, enriching the research landscape.

- Modeling Relationships: Techniques such as linear regression help create models that describe how independent variables impact dependent ones. This modeling ability is indispensable in fields ranging from economics to psychology.

- Data Interpretation: The ability to interpret statistical results fosters insightful conclusions that can inform policy and drive further research.

In essence, intermediate statistics empowers researchers to navigate the complexities of data, providing them with the tools necessary to uncover hidden truths and foster meaningful advancements across various disciplines.

Descriptive Statistics

Descriptive statistics is the bedrock of statistical analysis, summarizing and presenting data in ways that enhance understanding. Just as an artist uses colors and shapes to create a visual narrative, descriptive statistics employs numerical measures and visual representations to form a coherent overview of a dataset.

Key Elements of Descriptive Statistics Include:

- Measures of Central Tendency: These statistics include the mean, median, and mode, each serving to identify the central point within a dataset. The mean uses every value and is therefore sensitive to outliers, while the median is robust to extreme values and is often the better summary for skewed datasets. The mode, highlighting the most frequent observation, reveals trends that averages may overlook.

- Measures of Variability: Measures such as range, variance, and standard deviation provide insight into the spread of data points, illustrating how individual observations diverge from central values. A high standard deviation signifies a wide spread, indicating diverse data points, while a low standard deviation suggests consistency among values.

- Data Visualization: Utilizing charts, graphs, and tables, descriptive statistics communicate complex information efficiently. Visual tools like histograms or scatter plots help convey trends and relationships, allowing for a swift comprehension of data.

Through careful application of descriptive statistics, researchers can distill large quantities of data into meaningful insights, paving the way for subsequent analyses and interpretations.

Measures of Central Tendency

Measures of central tendency are essential quantitative descriptors that summarize data, providing a glimpse into what’s “typical” within a dataset. Imagine standing in a crowd, trying to discern the average height; the measures of central tendency give you a clear understanding of where most people stand.

- Mean:

- Definition: The average of a dataset calculated by summing all data points and dividing by the count.

- Formula: \( \text{Mean} = \frac{\sum x_i}{n} \)

- Example: For a dataset [2, 4, 6, 8], the mean is \( (2 + 4 + 6 + 8)/4 = 5 \).

- Median:

- Definition: The middle value in an ordered dataset. For even datasets, it is the average of the two middle values.

- Example: For [3, 5, 7], the median is 5; for [2, 4, 6, 8], it is \( (4 + 6)/2 = 5 \).

- Mode:

- Definition: The most frequently occurring value(s) in a dataset. A dataset may have multiple modes or none at all.

- Example: In [1, 2, 2, 3, 4], the mode is 2. In [1, 1, 2, 2, 3], it has two modes: 1 and 2.

Utilizing these measures allows researchers to summarize their data succinctly, providing clarity in their analyses and insights that can drive informed decision-making.
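The worked examples above can be reproduced with Python's standard `statistics` module. This is a minimal sketch rather than part of the course material; the variable names are illustrative only.

```python
import statistics

data = [2, 4, 6, 8]

# Mean: sum of the values divided by how many there are.
mean = statistics.mean(data)        # 5

# Median: middle value of the sorted data; for an even count,
# the average of the two middle values (4 and 6 here).
median = statistics.median(data)    # 5.0

# Mode: multimode returns every value tied for most frequent,
# so it handles both the single-mode and two-mode examples.
single_mode = statistics.multimode([1, 2, 2, 3, 4])   # [2]
two_modes = statistics.multimode([1, 1, 2, 2, 3])     # [1, 2]

print(mean, median, single_mode, two_modes)
```

`multimode` is used instead of `mode` because it returns all tied values, which matches the text's point that a dataset may have more than one mode.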

Measures of Dispersion

While measures of central tendency provide valuable insights, they alone do not tell the full story of data variability. Measures of dispersion reveal how spread out the values are, fostering a more comprehensive understanding of dataset characteristics.

- Range:

- Definition: The difference between the maximum and minimum values.

- Formula: \( \text{Range} = \text{Max}(x) - \text{Min}(x) \)

- Example: For [1, 2, 3, 4, 10], the range is \( 10 - 1 = 9 \).

- Variance:

- Definition: The average of the squared differences from the mean, giving a measure of how far each number in the set is from the mean. Dividing by \( n \) gives the population variance; the sample variance divides by \( n - 1 \) instead.

- Example: For [1, 2, 3], with a mean of 2, the population variance is calculated as \( \frac{(1-2)^2 + (2-2)^2 + (3-2)^2}{3} = \frac{2}{3} \approx 0.67 \).

- Standard Deviation:

- Definition: The square root of variance, indicating how much individual data points deviate from the mean.

- Example: Continuing the previous example, the standard deviation is \( \sqrt{0.67} \approx 0.82 \).

- Interquartile Range (IQR):

- Definition: The difference between the third quartile (Q3) and the first quartile (Q1), measuring the spread of the middle 50% of data.

- Example: For [1, 2, 3, 4, 5, 6, 7, 8], where Q1 is 2.5 and Q3 is 6.5 (the medians of the lower and upper halves), IQR is \( 6.5 - 2.5 = 4 \).

Understanding measures of dispersion is essential for interpreting data more effectively, as they highlight the variability within datasets, guiding conclusions drawn from statistical analyses.
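As a rough check on the examples above, the same quantities can be computed with the standard library. The helper `iqr_median_of_halves` is a hypothetical name introduced here; it assumes the "median of each half" quartile convention used in the IQR example, and other conventions can give slightly different quartiles.

```python
import statistics

# Range: maximum minus minimum.
range_data = [1, 2, 3, 4, 10]
data_range = max(range_data) - min(range_data)     # 9

values = [1, 2, 3]
# Population variance and standard deviation divide by n.
pop_variance = statistics.pvariance(values)        # 2/3, about 0.67
pop_std_dev = statistics.pstdev(values)            # about 0.82
# Sample variance divides by n - 1 instead.
sample_variance = statistics.variance(values)      # 1


def iqr_median_of_halves(xs):
    """IQR using the medians of the lower and upper halves as Q1 and Q3."""
    xs = sorted(xs)
    half = len(xs) // 2
    lower = xs[:half]
    # For an odd count, the middle value is left out of both halves.
    upper = xs[half:] if len(xs) % 2 == 0 else xs[half + 1:]
    return statistics.median(upper) - statistics.median(lower)


iqr = iqr_median_of_halves([1, 2, 3, 4, 5, 6, 7, 8])   # 6.5 - 2.5 = 4.0
print(data_range, pop_variance, pop_std_dev, sample_variance, iqr)
```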

Application in Data Analysis

The application of intermediate statistics in data analysis is akin to carving out a sculpture from a block of marble; it takes careful planning, technique, and insight to reveal the masterpiece within the raw material. Effective data analysis involves several key processes that are enhanced by intermediate statistics.

- Data Cleaning: Before analysis, ensuring the integrity of data is paramount. Intermediate statistics provide methods for identifying and handling missing data, outliers, and inconsistencies that could compromise results.

- Enhancing Interpretation: By employing descriptive statistics, analysts can craft compelling narratives from data. They formulate hypotheses and questions that drive exploration and analysis, lending deeper meaning to findings.

- Identifying Trends: Intermediate statistics allow for robust trend analysis particularly useful in business and finance. Patterns and correlations can be established, informing strategic decision-making in market analysis or resource allocation.

- Statistical Tests: Applying tests such as t-tests, ANOVA, and regression analysis illuminates relationships between variables and helps confirm or reject hypotheses. This fosters informed conclusions that shape research developments and practical implementations.

- Real-World Data Application: From healthcare to marketing, applying intermediate statistics transforms data into actionable insights. In healthcare, for instance, it can identify risk factors for diseases, enhancing preventive measures and treatments.

As a result, the application of intermediate statistics not only elevates understanding but also drives innovation and efficiency within various sectors.

Probability Theory

The realm of probability theory serves as the beating heart of statistics, breathing life into data analysis by enabling statisticians to assess the likelihood of outcomes and make informed predictions. Just as the course of a river can be predicted based on the landscape it traverses, probability theory equips researchers with the tools to navigate uncertain outcomes.

Fundamental Probability Concepts:

- Basic Probability Definitions: Probability quantifies the likelihood of an event occurring. It is represented by a number between 0 (impossibility) and 1 (certainty). For example, when flipping a fair coin, heads and tails each have a probability of 0.5.

- Sample Space and Events: The sample space (S) is the complete set of all possible outcomes of an experiment (e.g., rolling a die). Each event is a specific outcome or combination of outcomes within this space.

- Calculating Probability: Quantifying probabilities involves the ratio of favorable outcomes to total possible outcomes. For example, in a standard deck of cards, the probability of drawing an Ace is \( P(\text{Ace}) = \frac{4}{52} \).

- Conditional Probability: This calculates the probability of an event (A) given the occurrence of another event (B), expressed as \( P(A|B) \). This concept is vital for understanding interdependent events.

- Independence of Events: Events are independent if the occurrence of one does not influence the likelihood of the other, denoted by \( P(A \text{ and } B) = P(A) \times P(B) \).

- Bayes’ Theorem: Bayes’ theorem facilitates the updating of probabilities based on new evidence. This theorem is expressed as \( P(A|B) = \frac{P(B|A) \cdot P(A)}{P(B)} \), demonstrating how probabilities can change with the introduction of new information.

These foundational concepts illuminate how probability theory intricately intertwines with statistical methodologies, enabling researchers to make predictions and better understand the likelihood of various phenomena.

Fundamental Probability Concepts

Understanding fundamental probability concepts forms the backbone of effective statistical analysis. Here are the core elements:

- Basic Probability Definitions: Probability, expressed as a value from 0 to 1, quantifies how likely an event is to occur. For instance, rolling a fair die yields a probability of 1/6 for each face.

- Sample Space and Events: The sample space (S) encompasses all possible outcomes of an experiment. For a coin flip, the sample space is {Heads, Tails}. Each event is a subset of this sample space.

- Calculating Probability: The calculation of an event’s probability is expressed through the formula: \[ P(A) = \frac{\text{Number of favorable outcomes for A}}{\text{Total number of possible outcomes}} \] This formulation applies to random experiments with equally likely outcomes (e.g., drawing a card from a deck).

- Conditional Probability: Conditional probability assesses the likelihood of an event A given that event B has occurred: \[ P(A|B) = \frac{P(A \text{ and } B)}{P(B)} \] This highlights how a prior event can influence probabilities of subsequent events.

- Independence of Events: Two events A and B are deemed independent if the occurrence of one event does not impact the other. The probability of both events occurring is reflected in: \[ P(A \text{ and } B) = P(A) \times P(B) \]

- Bayes’ Theorem: This theorem facilitates the updating of probabilities based on new evidence: \[ P(A|B) = \frac{P(B|A) \cdot P(A)}{P(B)} \] It plays a crucial role in decision-making under uncertainty.

In sum, these fundamental concepts enable researchers to evaluate uncertainties expertly and lay the groundwork for more advanced statistical methodologies.
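To make the independence rule and Bayes' theorem concrete, here is a small sketch using exact fractions. The two-dice events and the screening-test rates (1% prevalence, 95% sensitivity, 5% false-positive rate) are assumptions chosen purely for illustration; they do not come from the course.

```python
from fractions import Fraction
from itertools import product

# Sample space for rolling two fair dice: 36 equally likely outcomes.
sample_space = list(product(range(1, 7), repeat=2))


def prob(event):
    """P(event) = favorable outcomes / total outcomes."""
    favorable = sum(1 for outcome in sample_space if event(outcome))
    return Fraction(favorable, len(sample_space))


# A: first die is even.  B: second die shows a six.
p_a = prob(lambda o: o[0] % 2 == 0)
p_b = prob(lambda o: o[1] == 6)
p_a_and_b = prob(lambda o: o[0] % 2 == 0 and o[1] == 6)
independent = p_a_and_b == p_a * p_b   # True: 1/12 == 1/2 * 1/6


def bayes_posterior(prior, likelihood, false_positive_rate):
    """P(A|B) = P(B|A) P(A) / P(B), with P(B) from the law of total probability."""
    evidence = likelihood * prior + false_positive_rate * (1 - prior)
    return likelihood * prior / evidence


# Hypothetical screening test: P(disease | positive result).
posterior = bayes_posterior(Fraction(1, 100), Fraction(95, 100), Fraction(5, 100))
print(independent, posterior, float(posterior))
```

With these assumed rates the posterior is 19/118, roughly 0.16: a positive result raises the probability of disease from 1% to about 16%, which shows how strongly the prior shapes the updated estimate.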

Conditional Probability

Conditional probability is pivotal in understanding the relationship between events in statistical contexts. By analyzing how the occurrence of one event influences another, researchers can deepen their insights into complex scenarios, akin to tracing the ripple effects of a stone dropped in water.

- Definition: Conditional probability refers to the probability of an event A given that another event B has occurred, expressed as \( P(A|B) \).

- Calculation: The formula for calculating conditional probability is: \[ P(A|B) = \frac{P(A \text{ and } B)}{P(B)} \] This formulation highlights the relationship between the two events and emphasizes how knowing the outcome of one can change the evaluation of the other.

- Example: Suppose there is a bag containing 3 red and 2 blue balls, and balls are drawn without replacement. If the first ball drawn is red, the bag now holds 2 red and 2 blue balls, so the probability of drawing another red ball is determined by this revised sample space: \[ P(\text{Red second} | \text{Red first}) = \frac{2}{4} = 0.5 \]

- Importance: The concept of conditional probability is essential in fields such as epidemiology, risk assessment, and finance, where the understanding of relationships between events can significantly enhance decision-making processes.

- Applications: Conditional probability often comes into play when determining risks and probabilities in various fields, such as predicting the likelihood of developing a health condition based on exposure to a specific risk factor.

Mastering conditional probability entwines analysis with practical implications, allowing researchers to navigate complex interdependencies among events deftly.
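The bag example can be checked by enumerating every ordered pair of draws and applying the conditional probability formula directly. This is an illustrative sketch that assumes drawing without replacement; the variable names are not from the source.

```python
from fractions import Fraction
from itertools import permutations

bag = ["R", "R", "R", "B", "B"]   # 3 red balls, 2 blue balls

# Every ordered pair of distinct balls: drawing two without replacement.
# Indices are used so that same-colored balls count as separate outcomes.
draws = list(permutations(range(len(bag)), 2))            # 20 outcomes

first_red = [d for d in draws if bag[d[0]] == "R"]        # event B
both_red = [d for d in first_red if bag[d[1]] == "R"]     # event A and B

p_first_red = Fraction(len(first_red), len(draws))        # 12/20 = 3/5
p_both_red = Fraction(len(both_red), len(draws))          # 6/20 = 3/10

# P(A|B) = P(A and B) / P(B)
p_red_second_given_red_first = p_both_red / p_first_red   # 1/2
print(p_red_second_given_red_first)
```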

Applications in Inferential Statistics

Inferential statistics is critical in making predictions and generalizations about populations based on sample data. Just as an artist infers the essence of a subject through interpretation and representation, inferential statistics empowers researchers to draw conclusions that extend beyond the immediate findings of their samples.

- Hypothesis Testing: Researchers use inferential statistics to formulate and test hypotheses about the characteristics of populations. By employing tests such as t-tests and ANOVA, they can determine whether observed differences across groups are statistically significant.

- Confidence Intervals: These provide an estimated range of values within which the true population parameter is likely to lie. For example, if a 95% confidence interval for a mean runs from 45 to 55, the interval was produced by a procedure that captures the true population mean in about 95% of repeated samples, so 45 to 55 is a plausible range for the population mean.

- Predictive Modeling: Inferential statistics incorporates regression analysis to create predictive models. By analyzing relationships between variables, researchers can forecast trends and behaviors, such as predicting sales growth based on advertising expenditure.

- Sampling Techniques: Selecting appropriate sampling methods (e.g., random, stratified) is vital in ensuring that a representative sample captures the population’s characteristics effectively. Good sampling minimizes bias and maximizes the validity of conclusions drawn.

- Real-World Implications: Whether informing public policy, enhancing marketing strategies, or advancing scientific research, the applications of inferential statistics underscore the importance of making data-driven decisions in a myriad of contexts.

In sum, inferential statistics serves as an essential tool for navigating the complexities of research, enabling practitioners to derive insightful conclusions from their analyses and influencing future directions in various fields.
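A confidence interval for a mean can be sketched with the standard library alone. The function name `mean_confidence_interval` and the sample values are hypothetical. The sketch uses the normal approximation (a z-interval); for a sample this small, a t-based interval would normally be preferred and would be somewhat wider.

```python
import math
import statistics


def mean_confidence_interval(sample, confidence=0.95):
    """Normal-approximation confidence interval for the population mean."""
    n = len(sample)
    mean = statistics.mean(sample)
    # Standard error: sample standard deviation divided by sqrt(n).
    std_err = statistics.stdev(sample) / math.sqrt(n)
    # Two-sided critical value, about 1.96 for 95% confidence.
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    return mean - z * std_err, mean + z * std_err


# Hypothetical measurements, invented for illustration.
sample = [48, 52, 50, 47, 53, 49, 51, 50, 46, 54]
low, high = mean_confidence_interval(sample)
print(f"95% CI for the mean: ({low:.2f}, {high:.2f})")
```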

Measuring Relationships

Effectively measuring relationships between variables is vital in statistics, enabling researchers to uncover the intricacies of data and understand how different factors interact. It is akin to constructing a bridge, connecting disparate points of data to illustrate correlations or causative influences.

Correlation vs. Causation

- Correlation:

- Definition: Correlation refers to the statistical relationship between two variables, illustrating how changes in one variable may consistently accompany changes in another.

- Types: Correlation can be positive (both variables increase together) or negative (one variable increases while the other decreases).

- Coefficient: The strength of correlation is quantified by the correlation coefficient (r), which ranges from -1 (perfect negative) through 0 (no correlation) to +1 (perfect positive).

- Causation:

- Definition: Causation implies that one variable directly influences the behavior of another.

- Experimental Evidence: Establishing causation requires controlled experiments and more extensive evidence than correlation alone provides.

- Confounding Variables: Often, the relationship detected between two variables is influenced by a third variable, known as a confounder, complicating causal assertions.

- Example: A classic example is the correlation between ice cream sales and drowning incidents; both rise in summer. The correlation is positive, but neither causes the other. Both are driven by a third variable, hot weather, which acts as a confounder.

- Statistical Techniques: Advanced statistical methods, such as path analysis and structural equation modeling, help clarify the relationships among variables. These techniques consider both direct effects (causal) and indirect effects (mediated through other variables) to elucidate complex interactions.

- Conclusion: Understanding the nuances of correlation and causation equips researchers with the analytical tools necessary for making informed decisions and advancing their fields. By recognizing the interplay between different variables, they can develop more accurate models and interpretations that drive meaningful insights.
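The correlation coefficient r described above can be computed directly from its definition. The function `pearson_r` and the small datasets below are illustrative assumptions, not material from the course.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation: covariation of x and y scaled by their spreads."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    co_deviation = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    return co_deviation / math.sqrt(ss_x * ss_y)


x = [1, 2, 3, 4]
r_positive = pearson_r(x, [2, 4, 6, 8])   # perfect positive relationship
r_negative = pearson_r(x, [8, 6, 4, 2])   # perfect negative relationship
r_none = pearson_r(x, [1, -1, -1, 1])     # no linear relationship
print(r_positive, r_negative, r_none)
```

A value of r near +1 or -1 describes how tightly the points follow a line; it does not say which variable, if either, drives the other.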

Linear Regression Analysis

Linear regression analysis stands as a fundamental technique for examining the relationships between variables, quantifying how changes in one or more independent variables predict changes in a dependent variable. It’s the framework through which researchers can uncover patterns and establish relationships, akin to drawing a line through a scatterplot of data points to reveal trends.

- Definition: Linear regression models the relationship between a dependent variable \( Y \) and one or more independent variables \( X \) through a linear equation: \[ Y = a + bX + e \] where \( a \) is the y-intercept, \( b \) is the slope, and \( e \) represents the error term.

- Model Fitting: The model is fitted using methods such as ordinary least squares (OLS), minimizing the sum of the squared differences between observed values and predicted values.

- Assumptions: Linear regression relies on several assumptions, including linearity (the relationship between variables is linear), independence (observations are independent), homoscedasticity (constant variance of errors), and normality of residuals (errors are normally distributed).

- Interpreting Coefficients: The intercept \( a \) is the predicted value of \( Y \) when \( X = 0 \), and the slope \( b \) is the expected change in \( Y \) for a one-unit increase in \( X \). For instance, if the independent variable is hours studied and the dependent variable is exam score, a positive \( b \) indicates that each additional hour of study is associated with a higher predicted exam score.

- Applications: Linear regression is widely used in various fields, including psychology (predicting behavioral outcomes), finance (forecasting stock prices), and marketing (evaluating the effectiveness of advertising campaigns).

By leveraging linear regression analysis, researchers can unlock the predictive power of their data, making informed decisions rooted in statistical evidence.
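For a single predictor, ordinary least squares has a closed-form solution, sketched below. The function `fit_simple_ols` and the hours/scores data are hypothetical and chosen only to mirror the study-hours example.

```python
def fit_simple_ols(xs, ys):
    """Fit Y = a + bX by ordinary least squares for one predictor."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope: co-deviation of x and y over the squared deviation of x.
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    s_xx = sum((x - mean_x) ** 2 for x in xs)
    b = s_xy / s_xx
    # Intercept: the fitted line passes through (mean_x, mean_y).
    a = mean_y - b * mean_x
    return a, b


# Hypothetical data: hours studied and exam scores.
hours = [1, 2, 3, 4, 5]
scores = [52, 58, 61, 67, 72]

a, b = fit_simple_ols(hours, scores)
predicted_for_6_hours = a + b * 6
print(f"a = {a:.1f}, b = {b:.1f}, prediction at 6 hours = {predicted_for_6_hours:.1f}")
```

For this made-up data the slope is 4.9, meaning each extra hour of study is associated with about 4.9 more points on the fitted line.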

Logistic Regression Techniques

Logistic regression is a specialized statistical method used for predicting the outcome of a categorical dependent variable based on one or more independent variables. Unlike linear regression, which supports continuous outcomes, logistic regression is tailored for scenarios where the dependent variable is binary or multinomial.

- Definition: Logistic regression models the log odds of the outcome as a linear combination of the independent variables. The logistic function converts that value into a predicted probability between 0 and 1: \[ p = \frac{1}{1 + e^{-z}} \] where \( z \) is the linear combination of independent variables.

- Types:

- Binary Logistic Regression: For a dichotomous outcome, such as yes/no or success/failure.

- Multinomial Logistic Regression: For outcomes with more than two categories, allowing comparison across multiple classes.

- Interpretation of Results: Coefficients obtained in logistic regression represent the change in log odds. When exponentiated, these coefficients yield odds ratios, offering a comprehensible summary of how changes in independent variables influence the probability of the outcome.

- Model Evaluation: Evaluation of logistic regression models relies on metrics such as accuracy, confusion matrix, and area under the ROC curve (AUC). These tools help assess model performance and refine predictions.

- Applications: Logistic regression is extensively used in fields such as healthcare (predicting the presence of diseases), marketing (evaluating customer response to campaigns), and social sciences (analyzing survey data on binary scales).

By effectively applying logistic regression techniques, researchers gain valuable insights into categorical data, supporting informed decision-making across various disciplines.
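The link between the logistic function, log odds, and odds ratios can be illustrated with a few lines of Python. The intercept and coefficient below are invented values, not estimates from any fitted model.

```python
import math


def logistic(z):
    """Map a linear score z to a probability between 0 and 1."""
    return 1 / (1 + math.exp(-z))


def predict_probability(intercept, coef, x):
    """Binary logistic model with one predictor: z = intercept + coef * x."""
    return logistic(intercept + coef * x)


def odds(p):
    """Odds corresponding to a probability p."""
    return p / (1 - p)


# Hypothetical coefficients, assumed for illustration only.
intercept, coef = -4.0, 0.8

p_at_5 = predict_probability(intercept, coef, 5)   # z = 0, so p = 0.5
p_at_6 = predict_probability(intercept, coef, 6)

# Exponentiating the coefficient gives the odds ratio for a one-unit
# increase in x; it matches the ratio of the odds at x = 6 and x = 5.
odds_ratio = math.exp(coef)
observed_ratio = odds(p_at_6) / odds(p_at_5)
print(p_at_5, round(odds_ratio, 3), round(observed_ratio, 3))
```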

Advanced Statistical Methods

Advanced statistical methods help tackle complex data sets and uncover patterns that may not be apparent using simpler techniques. These methods typically extend foundational concepts in statistics to explore relationships among multiple variables and allow for in-depth analyses.

- Nonparametric Statistics: Unlike parametric tests that make specific assumptions about data distributions (normality), nonparametric methods do not require these assumptions, making them suitable for analyzing ordinal or non-normally distributed data. Examples include the Wilcoxon test and Kruskal-Wallis test.

- ANOVA Techniques: Analysis of Variance (ANOVA) tests assess differences in means among three or more groups. It identifies whether any statistically significant differences exist, but follow-up tests are necessary to pinpoint where these differences lie.

- Multinomial Logistic Regression: This technique extends logistic regression to handle categorical outcomes with more than two classes. By estimating separate models for each class versus a reference category, it effectively captures complex relationships in multi-class scenarios.

- Structural Equation Modeling (SEM): SEM allows researchers to examine relationships between observed and latent variables. It provides a comprehensive framework for evaluating complex relationships while accommodating measurement error.

- Bayesian Statistics: This approach utilizes Bayes’ theorem for updating probability estimates as new information becomes available. Bayesian methods are particularly advantageous in decision-making processes and predictive analytics.

Each of these statistical methods serves to enhance the analytical capabilities of researchers, allowing for a comprehensive exploration of data to deduce meaningful conclusions and drive evidence-based decision-making.

Nonparametric Statistics

Nonparametric statistics provide flexible alternatives to traditional parametric analyses when assumptions about data distributions are violated. As the name suggests, these methods do not rely on parameters tied to specific population distributions, opening the door for analyses across various scenarios.

- Kruskal-Wallis Test: A nonparametric version of ANOVA, the Kruskal-Wallis test evaluates whether samples from different populations originate from the same distribution. It ranks all observations and tests for differences in rank sums.

- Mann-Whitney U Test: This test compares two independent samples to determine whether their underlying distributions differ. Rather than relying on sample means, it evaluates the ranks of the data.

- Wilcoxon Signed-Rank Test: Suitable for paired samples, this test assesses differences between paired observations, making it ideal for before-and-after assessments.

- Applicability in Medical Research: Nonparametric methods are especially important in medical settings, where data often do not adhere to normal distributions. For instance, analyzing ranked pain scores from clinical trials may employ the Mann-Whitney U test, efficiently comparing treatment effects.

- Rigorous Data Analysis: Armed with nonparametric methodologies, researchers can effectively analyze datasets characterized by skewed distributions or ordinal scales, ensuring that results remain robust and reliable.

By integrating nonparametric techniques into statistical analyses, researchers enrich their toolbox, adapting methodologies to meet the diverse characteristics that datasets may embody.
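The rank-based idea behind the Mann-Whitney U test can be sketched as follows. This computes only the U statistics, not a p-value, and the pain scores are hypothetical. In practice a statistical package would supply the significance test.

```python
def average_ranks(values):
    """Rank values from 1 to n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def mann_whitney_u(sample_a, sample_b):
    """Return (U_a, U_b) computed from the rank sum of the pooled samples."""
    n_a, n_b = len(sample_a), len(sample_b)
    ranks = average_ranks(list(sample_a) + list(sample_b))
    rank_sum_a = sum(ranks[:n_a])
    u_a = rank_sum_a - n_a * (n_a + 1) / 2
    u_b = n_a * n_b - u_a
    return u_a, u_b


# Hypothetical ordinal pain scores for two independent groups.
treatment = [2, 3, 3, 4]
control = [5, 6, 4, 7]
u_treatment, u_control = mann_whitney_u(treatment, control)
print(u_treatment, u_control)   # 0.5 15.5
```

A U value near zero for one group, as here, means almost every score in that group ranks below the scores in the other group.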

ANOVA Techniques

ANOVA (Analysis of Variance) remains a cornerstone of statistical methods enabling researchers to compare means across multiple groups. It is the key to unraveling the complexities of experimental data, akin to conducting an orchestra by harmonizing various instruments.

- One-Way ANOVA: This basic form of ANOVA assesses the impact of a single factor on a dependent variable. For example, examining whether different diets lead to varying levels of weight loss across groups would involve a one-way ANOVA.

- Two-Way ANOVA: As the name suggests, this technique evaluates the influence of two independent variables simultaneously, examining both main effects and interactions. It can provide deeper insights into how various factors influence outcomes.

- Post Hoc Tests: Following ANOVA, which merely indicates the existence of differences, post hoc tests (e.g., Tukey’s HSD) identify precisely where differences lie among multiple groups. This is crucial for interpreting ANOVA results meaningfully.

- Robustness: ANOVA techniques assume normality and homogeneity of variance, yet nonparametric alternatives such as the Kruskal-Wallis test can be employed when these assumptions are violated.

- Applications across Disciplines: ANOVA techniques find applications in numerous fields, including psychology (assessing group therapy effects), education (evaluating teaching methods), and agriculture (yield comparisons across different fertilizers), among others.

By leveraging ANOVA techniques, researchers can dissect complexities inherent in their data, leading to substantial insights and informing critical decision-making processes.
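The F statistic in a one-way ANOVA compares variation between group means with variation within groups. The sketch below uses made-up weight-loss figures for three diets and stops at the F statistic; turning F into a p-value needs the F distribution, which the standard library does not provide.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    all_values = [v for g in groups for v in g]
    grand_mean = sum(all_values) / len(all_values)
    group_means = [sum(g) / len(g) for g in groups]

    # Between-group sum of squares: how far group means sit from the grand mean.
    ss_between = sum(
        len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means)
    )
    # Within-group sum of squares: spread of values around their own group mean.
    ss_within = sum(
        (v - m) ** 2 for g, m in zip(groups, group_means) for v in g
    )

    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical weight loss (kg) under three diets.
diet_a = [2, 3, 4]
diet_b = [4, 5, 6]
diet_c = [6, 7, 8]

f_stat, df_between, df_within = one_way_anova_f([diet_a, diet_b, diet_c])
print(f_stat, df_between, df_within)   # 12.0 2 6
```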

Multinomial Logistic Regression

Multinomial logistic regression emerges as a robust methodology for analyzing categorical outcomes with multiple classes. It stands apart from its binary counterpart, allowing researchers to explore rich datasets characterized by more than two possible outcomes.

- Model Specification: In multinomial logistic regression, one category is typically designated as the reference category, and the model estimates the likelihood of each of the other classes relative to this reference.

- Multiple Classes: Researchers can distinguish among the various classes based on independent variables, revealing complex interactions and providing clear interpretations of results.

- Software Implementation: Multinomial logistic regression is implemented in various statistical packages, including SPSS and R. The modeling commands enable users to specify dependent and independent variables efficiently.

- Interpretation of Results: The output of multinomial logistic regression provides coefficients for each non-reference category, which can be exponentiated into odds ratios for easier interpretation. Each odds ratio shows how a predictor changes the odds of that category relative to the reference category.

- Applications in Diverse Fields: Multinomial logistic regression finds use across multiple disciplines, from predicting consumer behavior in marketing to analyzing survey responses in social research. Its capability to articulate complex relationships makes it a favored tool for statistical analysis.

By harnessing the power of multinomial logistic regression, researchers can unravel the complexities of categorical data, yielding insights that guide decision-making in multifaceted scenarios.
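The reference-category setup described above can be illustrated by turning linear scores into class probabilities. The categories ("car" as reference, "bus", "bike"), the predictor, and the coefficients are all hypothetical; a real analysis would estimate the coefficients with a statistical package.

```python
import math


def multinomial_probabilities(coefficients, x, reference="reference"):
    """Class probabilities for a one-predictor multinomial logit model.

    coefficients maps each non-reference category to (intercept, slope).
    The reference category has a linear score fixed at 0.
    """
    exp_scores = {
        category: math.exp(intercept + slope * x)
        for category, (intercept, slope) in coefficients.items()
    }
    denominator = 1.0 + sum(exp_scores.values())
    probabilities = {reference: 1.0 / denominator}
    for category, value in exp_scores.items():
        probabilities[category] = value / denominator
    return probabilities


# Hypothetical model: commute mode as a function of distance in km.
coefficients = {"bus": (0.5, -0.1), "bike": (1.0, -0.4)}
probs = multinomial_probabilities(coefficients, x=5, reference="car")
print({mode: round(p, 3) for mode, p in probs.items()})

# With every coefficient at zero, all categories are equally likely.
equal = multinomial_probabilities({"bus": (0, 0), "bike": (0, 0)}, x=5, reference="car")
```

Each non-reference category gets its own intercept and slope, which is why the output of such a model lists one set of coefficients per category.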

Statistical Software Utilization

The application of statistical software has transformed the landscape of data analysis, providing users with powerful tools to apply intermediate statistics techniques effectively. In the same way that artists utilize brushes and canvases to create compelling visuals, statisticians depend on software to distill insights from raw datasets.

- SPSS: Widely recognized for its user-friendly interface, SPSS provides tools for conducting a variety of statistical analyses ranging from descriptive statistics to advanced modeling techniques, including regression and ANOVA.

- R: Known for its flexibility and depth, R is a programming language that allows for custom analyses and complex visualizations. It caters to users seeking to implement unique statistical models or conduct extensive data management.

- Python: Utilizing libraries such as Pandas, NumPy, and StatsModels, Python is increasingly becoming a go-to tool for data analysis and statistical modeling. Its integration with machine learning expands its functional scope, making it invaluable in predictive analytics.

- Excel: While often considered a basic tool, Excel handles fundamental statistical analyses well. It provides functions for calculations, data visualization, and basic regression analysis.

- Access to Resources: Numerous tutorials, guides, and online communities offer support for users as they navigate the intricacies of statistical software. This collaborative environment fosters improved learning and application of statistical techniques.

In summary, the utilization of statistical software is imperative for the modern statistician. Proficiency in these tools allows users to execute analyses seamlessly, moving from raw data to actionable insights.

Using SPSS for Statistical Analysis

SPSS (Statistical Package for the Social Sciences) presents a versatile platform for performing a wide range of statistical analyses. Researchers often find it beneficial due to its user-friendly interface, which streamlines the statistical analysis process.

- Data Entry and Management: SPSS facilitates the importation and management of datasets in various formats, enabling users to clean and prepare data efficiently for analysis.

- Analysis Commands: With built-in functionalities, SPSS empowers users to execute diverse statistical tests, including t-tests, ANOVA, linear regression, and chi-square tests with minimal coding required.

- Output Interpretation: SPSS presents results in clear output tables, making it easier for researchers to interpret findings. Each table provides a structured format, showcasing key statistics, p-values, and confidence intervals.

- Visualizations: The software’s capabilities extend to creating comprehensive visualizations, including histograms, bar charts, and scatter plots, which enhance the communication of results through graphical representation.

- Automating Procedures: SPSS allows for the automation of repetitive tasks through syntax, enhancing efficiency when conducting large-scale analyses.

By leveraging SPSS for statistical analysis, researchers can enhance their productivity, yielding robust insights efficiently and effectively.

Interpreting SPSS Output

The ability to interpret SPSS output is crucial for understanding and drawing conclusions from statistical analyses effectively. Navigating output tables can initially seem daunting, much like deciphering a complex narrative in a book.

- Understanding Tables: SPSS outputs results in tabular form, breaking down statistics into digestible bits. Familiarizing oneself with common tables such as those displaying descriptive statistics, test results, and coefficients is paramount.

- Key Sections:

  - Descriptive Statistics: This table summarizes means and standard deviations, providing insight into the dataset’s characteristics.

  - ANOVA Table: Here, key values such as the F-statistic and p-value reveal whether group means differ significantly.

  - Regression Coefficients: Within regression outputs, coefficients indicate the magnitude and direction of relationships between independent and dependent variables.

- Confidence Intervals and Significance Testing: A confidence interval gives a range of plausible values for a population parameter, while a p-value indicates how likely results at least as extreme as those observed would be if the null hypothesis were true. Together they help researchers judge statistical relevance.

- Visual Relevance: SPSS may provide graphical representations of analyses, offering supplementary insights through visual comparison and trends.

- Practice and Interpretation: Engaging with practice datasets and interpretation exercises encourages fluency in navigating SPSS outputs, ultimately supporting informed decision-making and reporting.

By mastering the interpretation of SPSS output, researchers cultivate their analytical prowess and learn to extract valuable insights from their statistical analyses.
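One way to build intuition for the ANOVA table described above is to compute its main entries by hand. The sketch below uses only Python's standard library and three small hypothetical groups; it is not SPSS output, only an illustration of where the sums of squares, degrees of freedom, and F-statistic come from. Looking up the p-value requires the F distribution, which is left to statistical software.

```python
from statistics import fmean


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of numeric groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = fmean(v for g in groups for v in g)

    # Variation of group means around the grand mean.
    ss_between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups)
    # Variation of observations around their own group mean.
    ss_within = sum((v - fmean(g)) ** 2 for g in groups for v in g)

    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical scores for three groups, invented for illustration.
groups = [[4, 5, 6], [6, 7, 8], [9, 10, 11]]
f_stat, df_between, df_within = one_way_anova(groups)
```

For these groups the between-groups mean square is 19 and the within-groups mean square is 1, so F is about 19 on (2, 6) degrees of freedom. A large F relative to its degrees of freedom is what leads to the small p-value reported in the table.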

Practical Exercises in SPSS

Practical exercises are critical for solidifying understanding of statistical concepts and their application in SPSS. Engaging with hands-on exercises nurtures a deeper connection between theory and practice, empowering researchers to wield statistical techniques with confidence.

- Sample Datasets: Numerous online resources and textbooks, such as Milly KC’s Intermediate Statistics, offer sample datasets specifically designed for practicing statistical techniques in SPSS.

- Step-by-Step Instructions: Guides that walk through specific analyses, such as regression or ANOVA, step by step enhance learning by allowing users to replicate the analyses independently.

- Interpretation Challenges: Practical exercises that challenge users to interpret SPSS outputs will hone skills in identifying key statistics and drawing meaningful conclusions from the results.

- Case Studies: Engaging with real-world case studies empowers users to apply statistical concepts directly to relevant scenarios, fostering an understanding of their implications across various fields.

- Collaboration Opportunities: Group exercises or collaborative studies create opportunities for peer learning, enabling individuals to exchange knowledge and approaches to statistical analysis in SPSS.

By actively participating in practical exercises in SPSS, researchers foster proficiency and confidence in applying statistical methods, thereby equipping themselves to tackle real-world data challenges effectively.

Practical Applications of Intermediate Statistics

Intermediate statistics enriches not only academic knowledge but also practical applications across diverse fields, ensuring that statistical insights inform various sectors. The implications of these applications demonstrate the relevance and necessity of statistical literacy in today’s data-driven society.

Key Applications Include:

- Case Studies in Various Disciplines: Intermediate statistics empowers practitioners to conduct comprehensive analyses and interpret results across disciplines, from health sciences to marketing. Case studies allow for the illustration of statistical applications in real-world contexts, enhancing understanding and application.

- Statistical Methods in Industry: Businesses leverage intermediate statistics to analyze consumer behavior, financial trends, and market dynamics. By utilizing statistical techniques such as regression and time series analysis, organizations can make informed decisions about strategy and operations.

- Impact on Decision Making: The application of intermediate statistics optimizes decision-making processes across various sectors. In healthcare, for example, statistical analyses can assist in understanding treatment effectiveness, guiding policy formulation and patient care practices.

- Education and Training: Educational institutions utilize intermediate statistics to ensure that students are equipped with analytical skills necessary for professional success. Incorporating practical statistical training into curricula fosters a generation of data-literate graduates ready to engage in evidence-based practices.

- Social Research: The social sciences employ intermediate statistical techniques to evaluate policies, study social phenomena, and inform public discourse. By analyzing survey data and conducting experimental studies, researchers can assess societal trends and the efficacy of interventions.

The versatile applications of intermediate statistics continue to permeate various domains, underscoring its importance in modern research and practice.

Challenges in Intermediate Statistics

Despite its significance, the pursuit of understanding intermediate statistics comes with an array of challenges. As learners navigate this intricate landscape, they may encounter hurdles that impede comprehension and application.

- Conceptual Complexity: Intermediate statistics delves deeper into sophisticated statistical methods, posing a challenge for learners who struggle to grasp advanced principles. Establishing a strong foundation in basic statistics is crucial before venturing into intermediate topics.

- Data Quality and Integrity: Ensuring data quality can be daunting, as issues such as missing values and biases can distort statistical analyses. Careful data cleansing and management are essential to bolster accuracy and validity.

- Interpreting Results: Many learners face difficulties in interpreting statistical output, particularly when confronted with p-values and confidence intervals. Misinterpretation can lead to erroneous conclusions, underscoring the necessity for proper statistical literacy.

- Statistical Software Proficiency: Statistical software tools such as SPSS and R often come with a steep learning curve. Structured training sessions can lower this barrier, helping users become competent in executing analyses.

- Staying Current: The field of statistics constantly evolves, introducing new methods, technologies, and best practices. Researchers must cultivate a commitment to continuous learning to remain abreast of developments relevant to intermediate statistics.

By recognizing and addressing these challenges, learners can foster resilience and develop a deeper understanding of intermediate statistics.
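Because p-values and confidence intervals are singled out above as a common stumbling block, a small worked sketch may help. It uses Python's standard library and a normal (z) approximation; for a sample this small a t critical value would be more appropriate, so treat the numbers as approximate. The exam scores and the null value of 70 are hypothetical.

```python
import math
from statistics import NormalDist, fmean, stdev


def mean_confidence_interval(sample, confidence=0.95):
    """Approximate CI for a mean using a normal (z) critical value."""
    n = len(sample)
    mean = fmean(sample)
    standard_error = stdev(sample) / math.sqrt(n)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    return mean - z * standard_error, mean + z * standard_error


def two_sided_p_value(sample, null_mean):
    """Two-sided p-value for H0: population mean == null_mean (z approximation)."""
    standard_error = stdev(sample) / math.sqrt(len(sample))
    z_stat = (fmean(sample) - null_mean) / standard_error
    return 2 * (1 - NormalDist().cdf(abs(z_stat)))


# Hypothetical exam scores, invented for illustration.
scores = [72, 75, 78, 80, 69, 74, 77, 81, 73, 76]
low, high = mean_confidence_interval(scores)
p = two_sided_p_value(scores, null_mean=70)
```

The interval `(low, high)` is centered on the sample mean of 75.5 and lies entirely above 70, which agrees with the small p-value: both say the data are hard to reconcile with a population mean of 70. Neither number says how large or important the difference is, which is a separate question.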

Common Misunderstandings

Common misunderstandings surrounding intermediate statistics often stem from complexities intrinsic to the subject. Here are significant errors and tips for overcoming them effectively.

- Mistaking Correlation for Causation: A prevalent misconception is treating correlated variables as causally related. Correlation does not imply causation; establishing a causal link requires rigorous analysis, such as controlling for confounding variables or using an experimental design.

- Overreliance on p-values: Many researchers equate statistical significance with practical significance by focusing solely on p-values. A well-rounded approach requires consideration of effect sizes and confidence intervals to contextualize findings.

- Misapplying Statistical Tests: Using an inappropriate statistical test can lead to invalid conclusions. Familiarity with the various tests and their assumptions is essential for selecting a suitable method of analysis.

- Underestimating Sample Size: Many overlook the importance of an adequate sample size, and underpowered studies produce unreliable results. Researchers should consider statistical power when planning studies.

- Neglecting the Importance of Context: Data interpretation without context can result in misleading conclusions. Researchers should consider the broader implications of their findings and engage in critical thinking regarding their analyses.

By identifying these misunderstandings and actively addressing them, learners enhance their statistical literacy, paving the way for improved applications and analyses.
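Two of the points above, effect sizes and statistical power, lend themselves to a short calculation. The sketch below computes Cohen's d for two groups and then a rough per-group sample size for a two-sided, two-sample comparison of means. The sample-size formula is the common normal approximation, so dedicated power software may return a slightly larger number. The function names and data are hypothetical.

```python
import math
from statistics import NormalDist, fmean, variance


def cohens_d(a, b):
    """Standardized mean difference using the pooled standard deviation."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (fmean(a) - fmean(b)) / math.sqrt(pooled_var)


def n_per_group(d, alpha=0.05, power=0.80):
    """Approximate n per group needed to detect effect size d
    (two-sided test, normal approximation)."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_beta) / d) ** 2)


# Hypothetical measurements for two groups, invented for illustration.
treatment = [2, 4, 6]
control = [1, 3, 5]
d = cohens_d(treatment, control)
needed = n_per_group(d)
```

Here `d` comes out at 0.5, conventionally described as a medium effect, and the approximation suggests about 63 participants per group for 80% power at the 5% level. Three observations per group, as in this toy dataset, would be far too few to detect an effect of that size reliably.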

Tips for Overcoming Challenges

Overcoming challenges in intermediate statistics is a path paved by commitment, practice, and the strategic application of techniques. Here are some effective tips to navigate potential roadblocks.

- Strengthen Foundational Knowledge: Building a strong foundation in basic statistical concepts is vital before embracing the complexities of intermediate statistics.

- Engage in Practical Exercises: Regularly practicing statistical techniques using real-world datasets enhances understanding and boosts confidence in applying methods.

- Utilize Resource Materials: Leveraging textbooks, online tutorials, and courses can bolster comprehension and provide additional perspectives on statistical concepts.

- Participate in Study Groups: Collaborating with peers fosters engagement and facilitates collective learning by allowing individuals to discuss concepts and share insights.

- Seek Mentor Assistance: Mentorship from experienced professionals can guide learners through challenging topics while fostering deeper understanding and application.

- Continuous Learning: Embrace lifelong learning to stay updated on new scientific discoveries, techniques, and software updates related to statistics.

By adopting these strategies, learners develop resilience and confidence, enhancing their ability to navigate the transition from fundamental to intermediate statistics successfully.

Future Trends in Statistical Analysis

Looking ahead, the realm of statistical analysis is poised for transformative developments that will reshape how researchers engage with data. Here are key trends influencing the future of statistical analysis:

- Integration of Machine Learning: As machine learning algorithms become increasingly accessible, their integration into statistical analyses will enhance predictive capabilities while accommodating complex datasets more efficiently.

- Advancements in Software Tools: Ongoing improvements in statistical software such as SPSS, R, and Python will broaden functionalities, making sophisticated statistical techniques more user-friendly and adaptable.

- Big Data Analytics: The growing prevalence of big data necessitates the development and adoption of new statistical methods tailored to handle large, dynamic datasets. These methods will enhance insights and drive informed decision-making.

- Real-Time Data Analysis: Advances in data collection technology will enable real-time statistical analyses, facilitating immediate feedback and responses. This trend will revolutionize fields like healthcare and marketing by providing timely insights.

- Focus on Interpretation Over Calculation: The burgeoning emphasis on data storytelling and interpretation signifies a shift from purely statistical calculation to clearer narrative-driven interpretations, enhancing the communicative impact of statistical results.

By remaining attuned to these trends, statistics practitioners can effectively adapt to emerging methodologies and harness the full potential of statistical analysis in an evolving landscape.

In conclusion, intermediate statistics embodies a rich tapestry of concepts, methods, and applications that span across disciplines and influence decision-making processes in profound ways. Understanding techniques such as descriptive statistics, probability theory, and regression analysis equips researchers with the tools they need to extract meaningful insights from data. As we navigate the challenges of learning and applying intermediate statistics, fostering strong foundational knowledge, engaging in practical exercises, and staying current with advancements will empower individuals to succeed in this vital field. Ultimately, the combination of statistical rigor and creativity will pave the way for impactful research and evidence-based practices that enhance our understanding of the complex world we inhabit.

Frequently Asked Questions:

Business Model Innovation:

Embrace the concept of a legitimate business! Our strategy revolves around organizing group buys where participants collectively share the costs. The pooled funds are used to purchase popular courses, which we then offer to individuals with limited financial resources. While the authors of these courses might have concerns, our clients appreciate the affordability and accessibility we provide.

The Legal Landscape:

The legality of our activities is a gray area. Although we don’t have explicit permission from the course authors to resell the material, there’s a technical nuance involved. The course authors did not outline specific restrictions on resale when the courses were purchased. This legal nuance presents both an opportunity for us and a benefit for those seeking affordable access.

Quality Assurance: Addressing the Core Issue

When it comes to quality, purchasing a course directly from the sale page ensures that all materials and resources are identical to those obtained through traditional channels.

However, we set ourselves apart by offering more than just personal research and resale. It’s important to understand that we are not the official providers of these courses, which means that certain premium services are not included in our offering:

- There are no scheduled coaching calls or sessions with the author.

- Access to the author’s private Facebook group or web portal is not available.

- Membership in the author’s private forum is not included.

- There is no direct email support from the author or their team.

We operate independently with the aim of making courses more affordable by excluding the additional services offered through official channels. We greatly appreciate your understanding of our unique approach.
