Statistics Fundamentals for Testing with Ben Labay

39,00 $

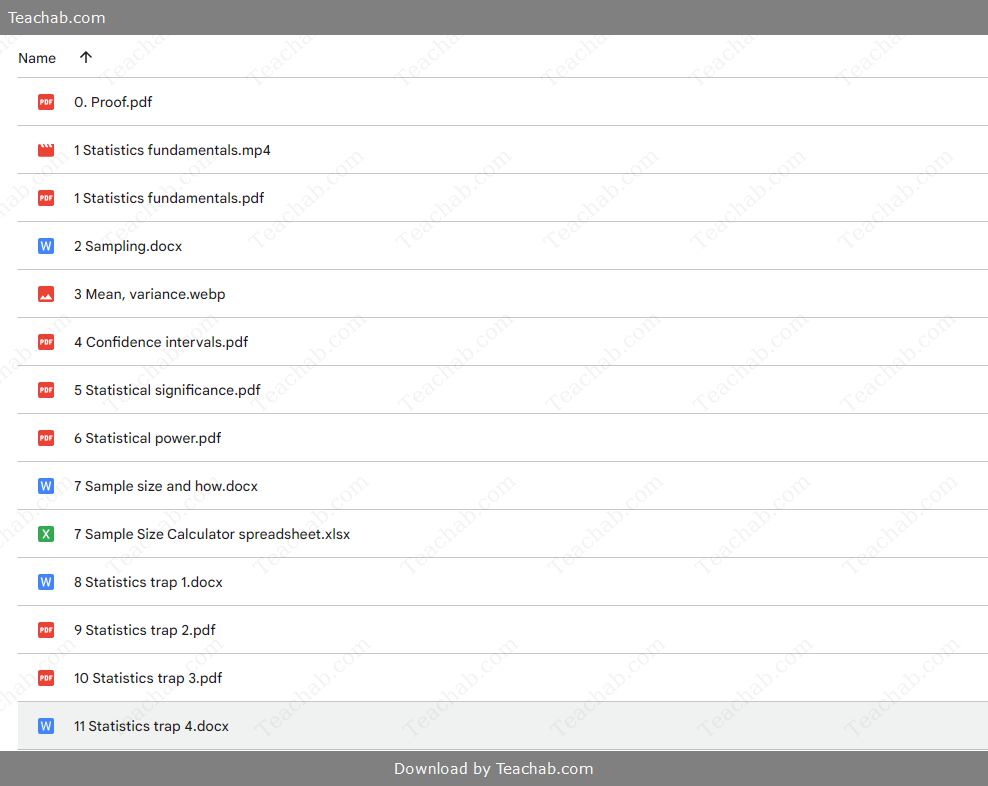

You may check content proof of “Statistics Fundamentals for Testing with Ben Labay” below:

Statistics Fundamentals for Testing by Ben Labay

Statistics can seem daunting, yet it holds the key to making decisions based on data rather than guesswork. With information now abundant and accessible, the ability to interpret and use statistics is more critical than ever. Ben Labay’s course “Statistics Fundamentals for Testing” introduces the essential statistical concepts that underpin successful A/B testing and data analysis. Stronger statistical literacy helps people harness their data and make better decisions in fields such as marketing, product development, and scientific research.

This article unpacks the fundamental concepts of statistics in testing, diving into elements such as mean, variance, statistical power, p-values, and the distinctions between Bayesian and Frequentist approaches. Additionally, we explore the methodology of A/B testing, emphasizing the importance of a systematic approach in analyzing results. Each section aims to provide thoughtful insights, relatable examples, and practical applications of these concepts, making them accessible for readers of all backgrounds. With these tools, you can transform raw data into actionable insights and improve outcomes in your testing endeavors.

Key Statistical Concepts

Key statistical concepts serve as the backbone of effective analysis and testing. Embracing foundational knowledge enables individuals to navigate the complexities of data interpretation, making informed decisions based on statistical evidence. Let’s dive into the essential concepts that form the foundation of statistical understanding:

- Mean: Often called the average, the mean is the sum of all values divided by how many there are: a single number that summarizes the center of a dataset. Think of a fruit salad: each fruit contributes its own flavor, and the mean is like the overall flavor of the dish.

- Variance: Variance measures how far data points spread around the mean (specifically, the average squared deviation from the mean). In the fruit salad analogy, it captures how varied the flavors are: a high variance means a wide range of tastes, while a low variance means the fruits taste similar.

These concepts work together: the mean establishes a baseline, and the variance shows how much individual observations deviate from it. Test designers need both to judge whether a difference between groups is meaningful or just noise.

| Concept | Description | Importance |
| --- | --- | --- |
| Mean | Average of a dataset | Summarizes the central tendency |
| Variance | Measure of data spread from the mean | Indicates how noisy the data are, and thus how reliable conclusions can be |

With these foundational concepts, one can approach data analysis more confidently, enhancing the quality of interpretations and the resultant decision-making process.

Understanding Mean and Variance

These concepts appear across many disciplines. Mean and variance are the two most basic statistics for describing how a dataset behaves.

- Mean: To calculate the mean, sum all the data points and divide by the count. For example, if three ads in a digital marketing campaign receive 50, 30, and 70 clicks, the mean is (50 + 30 + 70) / 3 = 50 clicks per ad. This figure summarizes performance and serves as a yardstick against which other campaigns can be measured.

- Variance: Variance goes a step further by quantifying how spread out the numbers are. To compute the sample variance, subtract the mean from each data point, square the results, sum those squares, and divide by the number of observations minus one. For the three ads above, that is (0² + (-20)² + 20²) / 2 = 400, so the standard deviation (the square root of the variance) is 20 clicks. In conversion rate tests, knowing the variance helps gauge how much user behavior fluctuates, which in turn determines how much data is needed before a difference can be trusted.
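
A minimal Python sketch of both calculations, using the three ad click counts from the example. The helper `sample_variance` is written out by hand to mirror the steps described; the standard library's `statistics.variance`, which also divides by n - 1, gives the same result.

```python
from statistics import mean, variance

# Clicks on the three ads from the example above
clicks = [50, 30, 70]

def sample_variance(values):
    """Sum of squared deviations from the mean, divided by n - 1."""
    m = sum(values) / len(values)
    squared_deviations = [(x - m) ** 2 for x in values]
    return sum(squared_deviations) / (len(values) - 1)

avg = mean(clicks)              # (50 + 30 + 70) / 3
var = sample_variance(clicks)   # (0**2 + (-20)**2 + 20**2) / 2
std_dev = var ** 0.5            # standard deviation, in clicks

print(f"mean={avg:.1f}, variance={var:.1f}, std_dev={std_dev:.1f}")
# mean=50.0, variance=400.0, std_dev=20.0
```

`statistics.pvariance` divides by n instead, which is appropriate only when the data cover the entire population rather than a sample.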

Using a practical lens, consider a basketball player’s points per game: a player who scores similarly from match to match has low variance, indicating consistency. Conversely, high variance signals unpredictability, a valuable insight for both players and coaches when planning strategy.

To summarize, the mean describes typical performance, while variance shows how consistent that performance is. Together they form the foundation for any data-driven analysis.

Importance of Statistical Power in Testing

Statistical power, often overlooked, plays a vital role in hypothesis testing, acting as a safety net against missed opportunities. Formally, statistical power is the probability that a test will correctly reject the null hypothesis when it is false, that is, detect an effect that really exists. A test with low power is likely to miss real effects, so a “no significant difference” result from an underpowered test says little about whether the change works.

High statistical power (conventionally targeted at 0.8 or higher) indicates a robust test design: if a real effect of the expected size exists, the test is likely to detect it. Consider a new product launch: if your sample size is too small, you may miss real trends in your target market, leading to misinformed strategic decisions.

Key Factors Affecting Statistical Power Include:

- Sample Size: Larger samples yield higher power because they reduce the standard error of the estimate, making real differences easier to distinguish from random noise.

- Effect Size: This is the magnitude of the difference you expect to observe. Larger effects are easier to separate from noise, so they yield higher power.

- Significance Level: The alpha level (commonly set at 0.05) also influences power. A stricter (smaller) alpha lowers power unless other aspects of the study, typically the sample size, are adjusted to compensate.
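
To make the three factors concrete, here is a rough sketch that approximates the power of a two-sided test comparing two conversion rates. It assumes a two-proportion z-test with the normal approximation; the function name `approx_power` and the 10% and 12% conversion rates are hypothetical, chosen only for illustration.

```python
from math import sqrt
from statistics import NormalDist

def approx_power(p_control, p_variant, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-proportion z-test.

    Normal approximation with unpooled variances; ignores the negligible
    chance of rejecting in the wrong direction.
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)   # about 1.96 for alpha = 0.05
    se = sqrt(p_control * (1 - p_control) / n_per_group
              + p_variant * (1 - p_variant) / n_per_group)
    effect = abs(p_variant - p_control)          # absolute lift to detect
    return std_normal.cdf(effect / se - z_crit)

baseline, target = 0.10, 0.12  # hypothetical conversion rates
print(approx_power(baseline, target, 1000))               # small sample
print(approx_power(baseline, target, 4000))               # larger sample
print(approx_power(baseline, 0.14, 1000))                 # larger effect
print(approx_power(baseline, target, 4000, alpha=0.01))   # stricter alpha
```

Each call changes one factor: the larger sample and the larger effect both raise power, while tightening alpha from 0.05 to 0.01 lowers it.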

Overall, a solid grasp of statistical power empowers researchers and decision-makers to craft better testing strategies. By ensuring that various components are aligned, your testing becomes a more reliable pathway to understanding authentic user behavior and drawing actionable insights from data.

Exploration of P-Value Misinterpretations

The p-value has become a buzzword in statistics, but it is often misinterpreted, which leads to poor decisions. At its core, the p-value is the probability of observing data at least as extreme as what you collected, assuming the null hypothesis is true. Many people mistake it for the probability that the null hypothesis is true, which is a common misunderstanding.

For instance, if a study reports a p-value of 0.01, it’s easy to assume that there’s a 1% chance the null hypothesis is true. That interpretation is incorrect: a p-value of 0.01 means that, if the null hypothesis were true, data this extreme would occur only about 1% of the time. It measures how surprising the data are under the null hypothesis, not the probability that the null hypothesis is true.
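
One way to see what a p-value does and does not say is to simulate A/A tests, where both groups share the same true conversion rate, so the null hypothesis is true by construction. This sketch assumes a pooled two-proportion z-test and a hypothetical 10% conversion rate; `two_sided_p_value` and `simulate_aa_tests` are illustrative helpers, not part of any library.

```python
import random
from math import sqrt
from statistics import NormalDist

def two_sided_p_value(conv_a, n_a, conv_b, n_b):
    """Two-proportion z-test p-value using the pooled standard error."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))

def simulate_aa_tests(true_rate=0.10, n=1000, runs=1000, seed=42):
    """Run A/A tests: both groups have the same true rate, so H0 holds."""
    rng = random.Random(seed)
    p_values = []
    for _ in range(runs):
        conv_a = sum(rng.random() < true_rate for _ in range(n))
        conv_b = sum(rng.random() < true_rate for _ in range(n))
        p_values.append(two_sided_p_value(conv_a, n, conv_b, n))
    return p_values

p_values = simulate_aa_tests()
share_below_005 = sum(p < 0.05 for p in p_values) / len(p_values)
print(f"{share_below_005:.1%} of A/A tests had p < 0.05")
```

Roughly 5% of these tests come out “significant” even though there is never a real difference. A small p-value describes how rare the data would be under the null hypothesis; it is not the chance that the null hypothesis is true.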

Common Misinterpretations of P-Values Include:

- P-Value as Truth Indicator: Incorrectly inferring that a low p-value offers a definitive answer regarding the null hypothesis’s truth fails to acknowledge the broader context, leading to unsubstantiated conclusions.

- Thresholds and Fixed Interpretations: Relying on arbitrary cutoffs (like 0.05) turns a continuous measure of evidence into a yes/no verdict, so p-values of 0.049 and 0.051 get treated very differently even though they carry nearly the same evidence.

To illustrate, consider a medical trial assessing a new drug. A low p-value might suggest that the drug has a significant effect compared to a placebo. However, that does not mean the drug is conclusively effective or that its effect is clinically meaningful; the result warrants further inquiry into the effect size, the study design, and other influencing factors.

Understanding the p-value’s true meaning can make a monumental difference. By grasping its implications, decision-makers can steer their analyses with more caution and depth, ultimately leading to improvements in how they interpret outcomes and implement strategies.

Differences Between Bayesian and Frequentist Approaches

In the realm of statistics, understanding the distinctions between Bayesian and Frequentist methodologies is pivotal for making informed choices about which approach to adopt for data analysis. Each framework offers unique perspectives, strengths, and limitations.

- Bayesian Approach: Rooted in Bayes’ theorem, the Bayesian approach combines prior knowledge with observed evidence to update the probability of a hypothesis as new data arrives. It allows prior beliefs to be incorporated explicitly, providing a flexible mechanism for testing and adaptation. Picture it like tuning a musical instrument: adjustments are made continuously as the performance evolves.

- Frequentist Approach: In contrast, the Frequentist methodology treats probability as the long-run frequency of events over repeated sampling and draws conclusions from the observed data alone, without formally incorporating prior knowledge. This method resembles a referee enforcing rules during a game: decisions follow an established framework, regardless of the players’ subjective experiences.
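
The contrast is easiest to see on a concrete question such as “is the variant’s conversion rate higher?”. A Bayesian analysis can answer it directly with a posterior probability. The sketch below assumes a Beta-Binomial model with a flat Beta(1, 1) prior on each conversion rate and estimates the probability by Monte Carlo sampling with Python’s `random.betavariate`; the function name and the conversion counts are hypothetical.

```python
import random

def prob_variant_beats_control(conv_a, n_a, conv_b, n_b,
                               prior_alpha=1.0, prior_beta=1.0,
                               draws=20000, seed=7):
    """Monte Carlo estimate of P(rate_B > rate_A) under Beta posteriors.

    With a Beta(prior_alpha, prior_beta) prior, observing `conv` successes in
    `n` trials gives a Beta(prior_alpha + conv, prior_beta + n - conv) posterior.
    """
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        rate_a = rng.betavariate(prior_alpha + conv_a, prior_beta + n_a - conv_a)
        rate_b = rng.betavariate(prior_alpha + conv_b, prior_beta + n_b - conv_b)
        wins += rate_b > rate_a   # count draws where the variant is better
    return wins / draws

# Hypothetical results: control 100/1000 conversions, variant 120/1000
print(prob_variant_beats_control(100, 1000, 120, 1000))
```

A Frequentist analysis of the same data would instead report a p-value and a confidence interval for the difference. Changing `prior_alpha` and `prior_beta` is where prior knowledge enters the Bayesian calculation.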

Comparative View: Bayesian vs. Frequentist

| Feature | Bayesian | Frequentist |
| --- | --- | --- |
| **Philosophy** | Probability as a degree of belief | Probability as long-run frequency |
| **Data Use** | Integrates prior information | Uses only the current data |
| **Flexibility** | Posterior can be updated as new data emerges | Typically relies on a sample size fixed in advance |
| **Output** | Posterior probabilities (e.g., the probability that B beats A) | P-values and confidence intervals |
| **Complexity** | Requires careful prior selection | Generally simpler to implement |

Both methodologies have their uses, and the choice between them depends on the context of the analysis. The Bayesian approach is often favored when data are limited or when reliable prior information is available, while the Frequentist approach is a common default for larger datasets, where its simpler setup suffices.

Ultimately, a comprehensive understanding of both methodologies arms researchers and analysts with the ability to choose the best tool for the job, enhancing the efficacy of their statistical explorations.

A/B Testing Methodology

In a world saturated with options, A/B testing stands out as a practical approach for identifying what resonates most with users. This methodology allows businesses to compare two versions of a product or webpage and determine which one performs better based on specific metrics.

The essence of A/B testing lies in its structured comparison: version A is the control and version B is the variant. An example might involve varying the color of a call-to-action button, with one group receiving the original blue button (A) and another a vibrant orange version (B). By tracking actual clicks and conversions, marketers can derive insights about user preferences.

Key Steps in A/B Testing Include:

- Hypothesis Formulation: Clearly define a hypothesis regarding how the change will improve performance. This sets a focused direction for the analysis.

- Random Sampling: Ensure participants are randomly assigned to either group, removing selection bias and increasing the reliability of results.

- Control and Variant Definition: Establish the control group (A) to compare against the variant group (B), facilitating a direct assessment of changes.
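
In web experiments, random assignment is often implemented by hashing a stable user identifier, so each visitor gets an effectively random but repeatable group. The following is a minimal sketch of that idea; the function `assign_group`, the experiment name `button-color`, and the 50/50 split are assumptions for illustration.

```python
import hashlib

def assign_group(user_id: str, experiment: str, variant_share: float = 0.5) -> str:
    """Deterministically assign a user to 'A' (control) or 'B' (variant).

    Hashing the experiment name with the user ID gives each user a stable,
    effectively random bucket, so returning visitors see the same version.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000   # first 32 bits mapped to [0, 1)
    return "B" if bucket < variant_share else "A"

groups = [assign_group(f"user-{i}", "button-color") for i in range(10_000)]
print(groups.count("B"))  # close to 5,000 with a 50/50 split
```

Including the experiment name in the hash means the same user can land in different groups across different experiments, which keeps assignments independent between tests.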

By employing a systematic A/B testing approach, marketers can make informed decisions backed by solid data. Each test provides valuable insights into user preferences and behaviors, and over time A/B testing becomes a critical component for organizations striving to optimize their offerings continuously.

Steps in A/B Testing Process

The A/B testing process is crafted through multiple structured steps that culminate in clear insights and reliable data-driven decisions. Here’s a closer look at each stage involved in this critical methodology:

- Generate Your Hypothesis: Begin by crafting a well-defined hypothesis. It should detail the anticipated outcome of the changes you plan to test. For example, “Changing the website’s header font to a bolder typeface will improve readability and increase conversion rates by 10%.”

- Define Control and Variant Groups: Identify and establish which version will be your control (the original) and which will be the variant (the modified element). This distinction maximizes the clarity of your findings.

- Sample Size Determination: Before starting, calculate the sample size needed to detect the smallest effect you care about with adequate power (commonly 80%) at your chosen significance level. An adequate sample reduces the influence of random noise that can skew outcomes.

- Random Sampling Methodology: Randomly assign traffic to both groups to eliminate selection bias. Randomization spreads external factors evenly across both groups on average, so they do not systematically favor one version.

- Run the A/B Test: Conduct the test by implementing both versions simultaneously. Collect user interactions under comparable conditions to ensure accurate analysis.

- Data Collection and Analysis: After a suitable duration for data collection, analyze performance metrics such as conversion rates, click-through rates, and bounce rates.

- Statistical Analysis: Use statistical methods to ascertain whether the observed changes hold significance. Compare the results from both groups to see if they support or refute the initial hypothesis.

- Session Insights & Iteration: Apply learnings from each test iteration to make necessary adjustments or run additional tests. A/B testing is an ongoing process, and each cycle builds upon previous insights.
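
The sample size step can be sketched with the standard normal-approximation formula for comparing two proportions. This assumes a two-sided test at alpha = 0.05 with 80% power and a hypothetical 10% baseline conversion rate; `sample_size_per_group` is an illustrative helper, and dedicated calculators may use slightly different formulas.

```python
from math import ceil
from statistics import NormalDist

def sample_size_per_group(p_control, p_variant, alpha=0.05, power=0.80):
    """Approximate visitors per group for a two-sided two-proportion z-test.

    n = (z_{1-alpha/2} + z_{power})^2 * [p1(1-p1) + p2(1-p2)] / (p2 - p1)^2
    """
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)
    z_power = std_normal.inv_cdf(power)
    variance_sum = p_control * (1 - p_control) + p_variant * (1 - p_variant)
    n = (z_alpha + z_power) ** 2 * variance_sum / (p_variant - p_control) ** 2
    return ceil(n)  # round up: a fractional visitor is not possible

# Hypothetical 10% baseline; a 10% relative lift means 10% -> 11%
print(sample_size_per_group(0.10, 0.11))
# A larger expected lift (10% -> 12%) needs far fewer visitors
print(sample_size_per_group(0.10, 0.12))
```

Under these assumptions, detecting the 10% relative lift from the example hypothesis (10% to 11%) takes roughly 14,700 visitors per group, while a lift to 12% needs only about 3,800, showing how strongly the minimum detectable effect drives test duration.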

By adhering to this structured methodology, businesses can elevate their data-driven decisions, precisely targeting changes that drive more substantial user engagement and conversions.

Defining Control and Variant Groups

To ensure the effectiveness of A/B testing, a crucial step is defining the control and variant groups, which together provide the framework for valid comparisons in your experiment.

- Control Group (A): This group remains untouched, serving as the baseline against which the variant is measured. For instance, if you are testing a new promotional banner, the control group would see the original banner without any adjustments.

- Variant Group (B): This group receives the change you want to evaluate. In our promotional banner example, this group would see the revised banner, potentially with a new design, copy, or call to action.

Why is this distinction vital?

- Baseline Measurement: The control group provides a reference point for understanding how the changes impact user behavior. Without a stable baseline, discerning the significance of differences becomes challenging.

- Bias Elimination: Proper random assignment to these groups minimizes biases and ensures the reliability of results. Because users typically do not know which group they are in, their behavior is not influenced by awareness of the test.

Analyzing Test Results and Statistical Significance

Interpreting the results of A/B testing hinges on statistical significance, which helps assess whether observed differences between the control and variant groups are due to changes made or merely attributable to random variation.

- Statistical Significance: A result is statistically significant when data this extreme would be unlikely if there were no real difference. A common threshold is p < 0.05, meaning that, if the null hypothesis were true, a difference at least this large would be observed less than 5% of the time.

- Effect Size: Alongside statistical significance, the effect size (the magnitude of the observed difference) provides crucial context. A statistically significant result with a negligible effect may be less useful than a considerably larger effect estimated with somewhat less certainty.

- Confidence Intervals: These intervals give a range of plausible values for the true effect in the population. A 95% confidence interval is constructed so that, across repeated experiments, about 95% of such intervals would contain the true effect; a narrow interval signals a precise estimate.
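
The three ideas can be combined in one small analysis. This sketch assumes a two-proportion z-test for the p-value and a normal-approximation (Wald) interval for the difference in conversion rates; the button counts are hypothetical and `analyze_ab` is an illustrative name.

```python
from math import sqrt
from statistics import NormalDist

def analyze_ab(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Return (lift, two-sided p-value, confidence interval for the lift)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    diff = p_b - p_a                                   # effect size: absolute lift
    # Pooled standard error for the test, which assumes H0: equal rates
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se_pooled = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    p_value = 2 * (1 - NormalDist().cdf(abs(diff / se_pooled)))
    # Unpooled standard error for the interval (no equal-rate assumption)
    se_unpooled = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z_crit = NormalDist().inv_cdf(0.5 + confidence / 2)
    ci = (diff - z_crit * se_unpooled, diff + z_crit * se_unpooled)
    return diff, p_value, ci

# Hypothetical counts: blue button (A) 400/5000, orange button (B) 460/5000
lift, p_value, (low, high) = analyze_ab(400, 5000, 460, 5000)
print(f"lift={lift:.2%}, p={p_value:.3f}, 95% CI=({low:.2%}, {high:.2%})")
```

With these made-up numbers the variant shows a lift of about 1.2 percentage points, a p-value of roughly 0.03, and a 95% interval that stays above zero but is wide, running from about 0.1 to about 2.3 points. Reporting the interval alongside the p-value makes that uncertainty visible.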

When the analysis yields statistically significant results, the variant may have a real impact on user behavior. However, interpretation and context remain critical: without a nuanced understanding of significance, hasty decisions can lead to missteps.

Application of Statistics in Conversion Rate Optimization

In the competitive world of online marketing, statistics is not just an abstract concept; it is a powerful tool that can significantly enhance conversion rates. By applying statistical methods to analyze user behavior and make data-driven decisions, organizations can systematically improve their performance. This practice, known as Conversion Rate Optimization (CRO), leverages statistics to validate results and drive measurable improvements.

Statistical techniques are employed at various stages of the A/B testing cycle, ensuring that obtained insights are reliable and actionable:

- Hypothesis Testing: Establishing a clear hypothesis is fundamental. Statistics provides the framework for stating it as a null and an alternative hypothesis, so analysts know in advance what outcome would support the change and can plan the test accordingly.

- Data Collection and Analysis: By gathering relevant data, CRO practices can evaluate user behavior objectively. Statistical analysis of click-through rates or bounce rates reveals insights into how effective specific elements are in driving conversions.

- Measuring Statistical Significance: Statistical tests validate whether the differences observed are due to the changes made or random chance. This validation is vital for making informed decisions on scaling or iterating on changes made.

Ultimately, statistics forms the backbone of effective CRO strategies, providing a structured approach to discern what resonates with users. Making decisions based on empirical data rather than intuition leads to more impactful outcomes, fulfilling the overarching goal of optimizing conversion rates.

Utilizing Data Management for Better Insights

The power of effective data management cannot be overstated when it comes to drawing meaningful insights from statistics and A/B testing. Proper data management encompasses the organization, storage, and analysis of data, leading to better decisions based on accurate information.

- Data Organization: Structuring data in a way that allows for easy retrieval and analysis is essential. Databases and data analytics tools help keep data clean and accurate, facilitating relevant comparisons and conclusions.

- Data Use in Experimentation: Statistical principles guide A/B testing by providing a structured approach for establishing hypotheses and interpreting results. For instance, defining conversion rate, bounce rate, and average session duration up front specifies exactly which metrics the analysis will use.

- Tools and Software: Modern data management tools make analysis more efficient. Platforms like Google Analytics (for collecting behavioral data) and Tableau (for visualization) make statistical outcomes easier to gather and interpret.

- Iterative Learning: A continuous feedback loop from data insights leads to improvement over time. By regularly analyzing performance data and adapting strategies accordingly, businesses can constantly refine their approaches.
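
As a small illustration of defining metrics before analysis, the sketch below aggregates conversion rate, bounce rate, and average session duration per group from raw session records. The record layout and the six sessions are hypothetical; real data would come from an analytics export or database.

```python
from collections import defaultdict

# Hypothetical session records: (group, converted, bounced, session_seconds)
sessions = [
    ("A", False, True, 12),
    ("A", True, False, 240),
    ("A", False, False, 95),
    ("B", True, False, 310),
    ("B", False, True, 8),
    ("B", True, False, 180),
]

def summarize(records):
    """Aggregate conversion rate, bounce rate and mean session duration per group."""
    totals = defaultdict(lambda: {"sessions": 0, "conversions": 0, "bounces": 0, "seconds": 0})
    for group, converted, bounced, seconds in records:
        t = totals[group]
        t["sessions"] += 1
        t["conversions"] += converted   # True counts as 1
        t["bounces"] += bounced
        t["seconds"] += seconds
    return {
        group: {
            "conversion_rate": t["conversions"] / t["sessions"],
            "bounce_rate": t["bounces"] / t["sessions"],
            "avg_session_seconds": t["seconds"] / t["sessions"],
        }
        for group, t in totals.items()
    }

summary = summarize(sessions)
print(summary["B"])
```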

By investing in data management practices, organizations can derive actionable insights that inform better decisions. This creates a strategic advantage in the competitive landscape, enabling continuous improvement and optimization over time.

Best Practices for Implementing Tests

Implementation of A/B tests is an essential part of data-driven decision-making, yet it must be executed with care. By adhering to best practices, organizations can maximize the effectiveness of their testing methodologies while circumventing common pitfalls.

- Define Success Metrics: It is vital to establish clear and specific metrics that will indicate success, such as conversion rates, average order values, or user engagement metrics. Clear definitions make the analysis straightforward.

- Test One Variable at a Time: Focusing on a single variable allows teams to isolate the effects of each change. For example, if a business tests multiple changes simultaneously, it becomes impossible to discern which variable influenced results the most.

- Ensure Randomization: Randomly assigning participants to control and variant groups reduces biases, promoting the validity of outcomes. This means each user should have an equal chance of being assigned to either group.

- Sufficient Sample Size: Calculating an adequate sample size prior to testing ensures that the test has sufficient statistical power. Small samples may yield unreliable results, leading to erroneous conclusions.

- Document Learnings: After completing any A/B test, analyzing both successful and unsuccessful outcomes solidifies the foundation for iterative learning. Proper documentation fosters continuous improvement by refining future testing efforts.

By integrating these best practices into A/B testing, teams can enhance their methodologies, leading to actionable insights and decision-making that truly reflect user preferences and behaviors. Ultimately, well-implemented tests yield rich dividends, paving the way for data-driven success.

Common Pitfalls to Avoid in Testing

Even with the best intentions, A/B testing can fall victim to common pitfalls that undermine the integrity of results. Understanding these missteps can help organizations navigate their testing efforts more effectively.

- Misinterpretation of Results: One of the most prevalent challenges is misconstruing statistical significance. With very large samples, even trivial differences can be statistically significant, and teams may wrongly read them as substantial effects.

- Inadequate Sample Size: Running tests on too few users leaves them underpowered, so real effects are likely to go undetected. An insufficient sample clouds the reliability of data and weakens conclusions.

- Ignoring Outside Factors: Sometimes, external influences (like seasonality or special events) may skew results. Not accounting for these variables can mislead analysis and lead to misguided decisions.

- Failure to Analyze Post-Test Insights: After completing a test, neglecting to delve into the ‘why’ behind the results can hinder future improvements. Reflecting on outcomes enriches learning experiences and informs better testing designs.

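- Testing Multiple Variables Simultaneously: Bundling several changes into one variant makes it impossible to tell which change drove the result, and multivariate tests need much larger samples and careful design to untangle interactions among changes. Isolating the effect of each variable is pivotal for accurate evaluation.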
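
The first pitfall often comes from confusing statistical significance with practical importance. In this sketch (a pooled two-proportion z-test with hypothetical counts), the observed lift is the same 0.1 percentage points in both cases; only the sample size changes.

```python
from math import sqrt
from statistics import NormalDist

def z_test_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value of a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))

# The same hypothetical lift (10.0% -> 10.1%) at two very different sample sizes
p_small = z_test_p_value(1_000, 10_000, 1_010, 10_000)
p_huge = z_test_p_value(1_000_000, 10_000_000, 1_010_000, 10_000_000)
print(f"10k per group: p={p_small:.2f}; 10M per group: p={p_huge:.1e}")
```

The tiny lift is nowhere near significant with 10,000 users per group but overwhelmingly significant with 10 million. Whether a 0.1-point lift is worth shipping is a business judgment that the p-value cannot make.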

By steering clear of these common pitfalls, organizations can enhance the quality and credibility of their A/B testing efforts. Each refined process contributes to more trustworthy outcomes, ultimately empowering better business decisions.

Course Insights and Learnings

Ben Labay’s “Statistics Fundamentals for Testing” provides numerous insights that significantly contribute to understanding statistics’ pivotal role in effective testing and decision-making. Below are some key takeaways that emerge from the course.

- Emphasis on Core Concepts: The course underlines the importance of foundational statistical concepts such as mean, variance, and significance levels. These elements are crucial for grasping how statistical testing operates and aids comprehension of experimental outcomes.

- Understanding Statistical Power: Labay emphasizes that understanding statistical power is essential in designing experiments effectively. High statistical power reduces the likelihood of Type II errors, allowing analysts to detect genuine effects.

- Navigating Misinterpretations: The course prioritizes clarifying the often misused p-value concept. By shining a spotlight on common misinterpretations, Labay equips students with the tools to make more informed, data-driven decisions.

- Application to A/B Testing: The relevance of statistical principles in A/B testing is thoroughly explored. Labay provides insights into designing, executing, and analyzing tests while ensuring accurate interpretation of results.

- Importance of Iteration and Learning: Continuous improvement is a recurring theme throughout the course. Each test should inform customer insights and strategy alterations, contributing to long-term growth and enhanced decision-making.

Labay’s course delivers a comprehensive foundation for understanding statistics as a strategic tool in testing, allowing businesses to leverage these insights to improve engagement and optimize performance effectively.

Comprehensive Breakdown of Course Content

“Statistics Fundamentals for Testing” by Ben Labay meticulously covers various statistical concepts crucial for effective testing methodologies. Each component builds upon the last, ensuring a comprehensive understanding of how statistics informs decisions. Here’s a breakdown that outlines the main content covered in the course.

- Introduction to Statistics: The foundation begins with basic statistical terminology such as mean, median, and mode. Grasping these concepts allows learners to interpret data correctly.

- Data Distribution and Measures of Variability: Variance and standard deviation are thoroughly discussed, inviting students to explore how spread impacts interpretations.

- Hypothesis Testing: An exploration into formulating null and alternative hypotheses, which is integral to structured testing and informed decision-making.

- Interpreting P-Values and Outcome Statistics: Labay emphasizes what p-values do and do not say about results and how statistical significance should shape conclusions.

- Practical A/B Testing Applications: The course concludes with concrete applications, showcasing real-world examples and methodologies for directing A/B tests effectively in digital marketing.

- Addressing Common Pitfalls: Awareness of frequent mistakes in statistical testing guides learners toward more reliable experimental practices.

This comprehensive approach equips students with the foundational tools needed to navigate the complexities of statistical analysis effectively, leading to more robust data-driven decision-making in their respective disciplines.

Key Takeaways from Ben Labay’s Instruction

Ben Labay’s teaching style amalgamates theoretical frameworks with practical applications, promoting an engaging learning experience. Here are some important takeaways that underscore the value of his instruction in statistics for testing:

- Statistical Insights as Decision-Making Tools: Labay encourages students to view statistical insights not just as academic constructs but as practical tools that aid real-world decision-making.

- Emphasis on Experimental Design: The importance of designing rigorous experiments to uncover genuine effects is highlighted. A methodical approach lays the groundwork for effective analysis.

- Critical Thinking and Problem-Solving: Labay’s course fosters an environment where learners are encouraged to engage critically with data, inspiring them to approach problems analytically and seek robust solutions.

- Application of Techniques Across Industries: The principles taught are applicable not just in marketing but across various fields such as healthcare, finance, and technology, amplifying their relevance.

- Continuous Learning: Labay emphasizes that learning statistics is an ongoing journey, advocating for a culture of iterative improvements as one applies newfound insights in real-world settings.

By synthesizing theoretical understanding with practical applications, Labay’s instruction becomes a valuable resource for those pursuing proficiency in statistics, particularly in the context of testing and data analysis.

Recap of Statistical Concepts in Practical Scenarios

Statistics is not merely a realm of abstract numbers; it finds extensive applications in practical scenarios that drive informed decision-making. Ben Labay’s course underscores key statistical concepts that can significantly impact outcomes across various disciplines.

- Mean and Variance in Marketing Campaigns: When analyzing a marketing campaign, marketers can use the mean to assess average engagement during the campaign’s duration and variance to evaluate fluctuations in user responses. This informs future marketing strategy improvements by revealing what resonated with audiences.

- Statistical Power in Healthcare Studies: In clinical trials, ensuring statistical power is critical. By adequately planning sample size and understanding effect sizes, researchers can avoid Type II errors, in which genuine treatment effects go undetected and patient care suffers.

- P-Values in Research Findings: Within scientific studies, p-values inform whether results are statistically significant. An understanding of how to interpret these values underpins the credibility of research outcomes and supports sound conclusions.

- A/B Testing in User Experience: User experience teams leverage A/B testing to refine site interfaces, modifying elements like button color for optimal engagement. Statistical analysis illuminates what changes yield better conversion rates, contributing to enhanced usability.

In conclusion, the practical application of statistical concepts extends well beyond the classroom: these principles are at work in marketing, healthcare, and research, making informed decision-making a cornerstone of operational practice.

Further Learning and Resources

Embarking on the journey of statistical enlightenment calls for continual learning beyond existing frameworks. There exists a wealth of additional resources tailored to enhance understanding while applying these concepts effectively in real-world scenarios.

- Online Courses: Platforms such as Coursera and edX offer extensive courses in statistics and data analysis, from beginner levels to advanced statistical modeling. These platforms provide flexible learning environments that cater to different learning preferences.

- Reading Materials: Books like “The Art of Statistics” by David Spiegelhalter invite readers to explore statistics through engaging narratives, grounding concepts in everyday contexts. They serve as great supplementary materials alongside academic courses.

- Webinars and Workshops: Participating in webinars hosted by industry professionals can supplement your learning. These often focus on specific applications of statistics across sectors, providing tailored insights.

- Statistical Software Tutorials: Gaining proficiency in statistical software (such as R, Python, or SPSS) is instrumental. Online tutorials can give hands-on experience using these tools to perform analyses, interpret results and visualize data effectively.

Recommended Additional Courses on Statistics

To deepen statistical understanding beyond Ben Labay’s course, consider exploring these recommended courses that align with his teachings and broaden your knowledge base:

- “Introduction to Data Science” – Offered by Johns Hopkins University, this course covers statistical analysis, data visualization, and machine learning fundamentals.

- “Statistics with R” – A Coursera specialization focusing on statistical analysis and interpretation using R, allowing practical application of statistical concepts in coding.

- “Applied Data Science with Python” – Offered by the University of Michigan, this specialization dives into applied statistics and data science techniques using Python.

- “Foundations of Data Science” – Aimed at introducing statistical tools necessary for data analysis while emphasizing the application of theoretical understanding in data-centric projects.

- “Statistics for Managers” – This course provides business professionals with practical statistics intertwined with decision-making processes relevant to their roles.

Together, these courses provide a well-rounded grounding in statistics for both applied settings and scientific inquiry.

Useful Tools for Data Analysis and Interpretation

Leveraging the right tools can significantly enhance the effectiveness of data analysis and interpretation in statistical contexts. Here’s a compilation of some pivotal tools that can aid in performing robust data analyses:

- R and RStudio: As an open-source programming language, R is widely used for statistical computing and graphics. It provides extensive packages for data analysis, allowing users to perform complex statistical tests.

- Python with Libraries (Pandas, NumPy, SciPy): Python, coupled with its powerful libraries, offers a versatile framework for performing statistical analyses and executing data operations. It’s ideal for those looking to integrate statistical analysis into broader programming practices.

- Tableau: Known for its user-friendly interface, Tableau is a powerful data visualization tool allowing users to turn complex data into visually engaging dashboards.

- Google Analytics: A fundamental tool for tracking user interactions on websites, Google Analytics captures the conversion and event data used to evaluate A/B tests and other marketing campaigns. Significance testing on that data is usually done in a dedicated testing platform or in statistical software.

- Excel: Despite its simplicity, Excel remains one of the most accessible tools for conducting basic statistical analyses and manipulating datasets at a smaller scale.

These tools help professionals analyze test data and derive actionable insights that improve outcomes in testing and beyond.
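To illustrate the kind of analysis the Python stack above supports, here is a minimal sketch of evaluating an A/B test with SciPy. It assumes conversion is a binary outcome and uses a chi-square test of independence on a 2×2 table, which is one common frequentist choice among several (a two-proportion z-test or a Bayesian model would also work). The visitor and conversion counts are made up for illustration, and the helper name `ab_test_chi_square` is hypothetical, not part of any library or of Ben Labay's course.

```python
from scipy.stats import chi2_contingency

# Hypothetical A/B test counts (illustrative only, not real data):
# each list is one variant as [converted, did_not_convert].
control = [200, 4800]  # 5,000 visitors, 4.0% conversion
variant = [250, 4750]  # 5,000 visitors, 5.0% conversion


def ab_test_chi_square(a, b, alpha=0.05):
    """Run a chi-square test of independence on a 2x2 conversion table.

    Returns both conversion rates, the p-value, and whether the
    difference is significant at the chosen alpha.
    """
    table = [a, b]
    # correction=False disables Yates' continuity correction, so the
    # statistic equals the square of the pooled two-proportion z statistic.
    chi2, p_value, dof, expected = chi2_contingency(table, correction=False)
    rate_a = a[0] / sum(a)
    rate_b = b[0] / sum(b)
    # Convert NumPy scalars to plain Python types for easier use downstream.
    return rate_a, rate_b, float(p_value), bool(p_value < alpha)


rate_a, rate_b, p, significant = ab_test_chi_square(control, variant)
print(f"control={rate_a:.3f} variant={rate_b:.3f} p={p:.4f} significant={significant}")
```

For these hypothetical counts the p-value falls below 0.05. In a real test, fix the sample size and significance level before looking at the results rather than stopping as soon as the p-value dips below the threshold.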

Suggested Readings for Advanced Statistical Knowledge

To further cultivate your understanding and enhance your knowledge of statistics, consider the following suggested readings that span both foundational concepts and advanced methodologies:

- “Statistics: The Art and Science of Learning from Data” by Alan Agresti and Christine Franklin: This book serves as an accessible introduction to statistical concepts, blending theoretical knowledge with practical applications.

- “Bayesian Data Analysis” by Andrew Gelman et al.: A comprehensive resource that delves deeply into Bayesian statistics, revealing advanced methodologies and applications in practical scenarios.

- “The Data Warehouse Toolkit” by Ralph Kimball: While more focused on data warehousing than statistics per se, this book provides insights into handling and interpreting structured data, important for statistical applications.

- “Practical Statistics for Data Scientists” by Peter Bruce and Andrew Bruce: This text bridges statistics and practical applications in data science, offering insights into core statistical concepts and real-world use cases.

- “What If? Serious Scientific Answers to Absurd Hypothetical Questions” by Randall Munroe: Engaging and fun, this book is less about statistics than about quantitative reasoning. Its back-of-the-envelope estimates for absurd hypothetical scenarios encourage the careful, numbers-first thinking that good data interpretation depends on.

These readings span theoretical frameworks and practical applications, enriching your understanding of statistical analysis and reinforcing the role statistics plays in addressing real-world challenges.

By utilizing the resources outlined here, you will cultivate a deeper understanding of statistics. Education in statistics fosters a more robust approach to decision-making, enabling individuals and organizations to navigate data with confidence.

In the end, the foundation laid by Ben Labay’s instruction is just the beginning of an empowering journey into the world of statistics, testing, and data analysis.
